We’ve been dreaming of robots for over a century, but are we any closer to having fully automated robots? David Mizzi from THINK gets in touch with Daniel Camilleri, University of Malta (UM) alumnus and CEO and founder of Cyberselves, to talk robots.

Enter the swarm

Author: Jean Luc Farrugia

Once upon a time, the term ‘robot’ conjured up images of futuristic machines from the realm of science fiction. However, we can find the roots of automation much closer to home.

Nature is the great teacher. In the early days, when Artificial Intelligence was driven by symbolic AI (whereby entities in an environment are represented by symbols which are processed by mathematical and logical rules to make decisions on what actions to take), Australian entrepreneur and roboticist Rodney Brooks looked to animals for inspiration. There, he observed highly intelligent behaviours; take lionesses’ ability to coordinate and hunt down prey, or elephants’ skill in navigating vast lands using their senses. These creatures needed no maps, no mathematical models, and yet left even the best robots in the dust.

This gave rise to a slew of biologically-inspired approaches. Successful applications include domestic robot vacuums and space exploration rovers.

Swarm Robotics is an approach that extends this concept by taking a cue from collaborative behaviours used by animals like ants or bees, all while harnessing the emerging IoT (Internet of Things) trend that allows technology to communicate.

Supervised by Prof. Ing. Simon G. Fabri, I designed a system that enabled a group of robots to intelligently arrange themselves into different patterns while in motion, just like a herd of elephants, a flock of birds, or even a group of dancers!

I built and tested my system using real robots, which had to transport a box to target destinations chosen by the user. Unlike previous work, the algorithms I developed are not restricted by formation shape. My robots can change shape on the fly, allowing them to adapt to the task at hand. The system is quite simple and easy to use.

The group consisted of three robots built from inexpensive off-the-shelf components, and simulations confirmed that the system could also be used for larger groups. The robots could push, grasp, and cage objects to move them from point A to B. To cage an object, the robots surround it to trap it, then move together to push it along. Caging proved to be the most robust method, delivering the object even when a robot became immobilised, though grasping delivered more accurate results.
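To give a feel for what caging involves, here is a minimal Python sketch that places a group of robots evenly on a circle around an object and then shifts the whole formation towards a target. It is only an illustration under simple assumptions (a circular cage and a flat 2D world); the function names `caging_positions` and `shift_formation` are hypothetical and are not taken from the project's algorithms.

```python
import math


def caging_positions(cx, cy, radius, n_robots, start_angle=0.0):
    """Return n_robots (x, y) targets evenly spaced on a circle around (cx, cy).

    Assumption: the cage is a circle of the given radius; the real system
    is not restricted to any particular formation shape.
    """
    positions = []
    for i in range(n_robots):
        angle = start_angle + 2.0 * math.pi * i / n_robots
        positions.append((cx + radius * math.cos(angle),
                          cy + radius * math.sin(angle)))
    return positions


def shift_formation(positions, dx, dy):
    """Translate every robot target by the same offset, keeping the shape."""
    return [(x + dx, y + dy) for x, y in positions]


if __name__ == "__main__":
    # Three robots caging an object at the origin, then moving 2 m along x.
    cage = caging_positions(0.0, 0.0, 1.0, 3)
    for x, y in shift_formation(cage, 2.0, 0.0):
        print(f"robot target: ({x:.2f}, {y:.2f})")
```

Because every robot receives the same offset, the cage keeps its shape while it moves, which is the basic idea behind pushing a trapped object along.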

Collective transportation can have a great impact on the world’s economy. From the construction and manufacturing industries, to container terminal operations, robots can replace humans to protect them from the dangerous scenarios many workers face on a daily basis.

This research project was carried out as part of the M.Sc. in Engineering (Electrical) programme at the Faculty of Engineering. A paper entitled “Swarm Robotics for Object Transportation” was published at the UKACC Control 2018 conference and is available on the IEEE Xplore digital library.

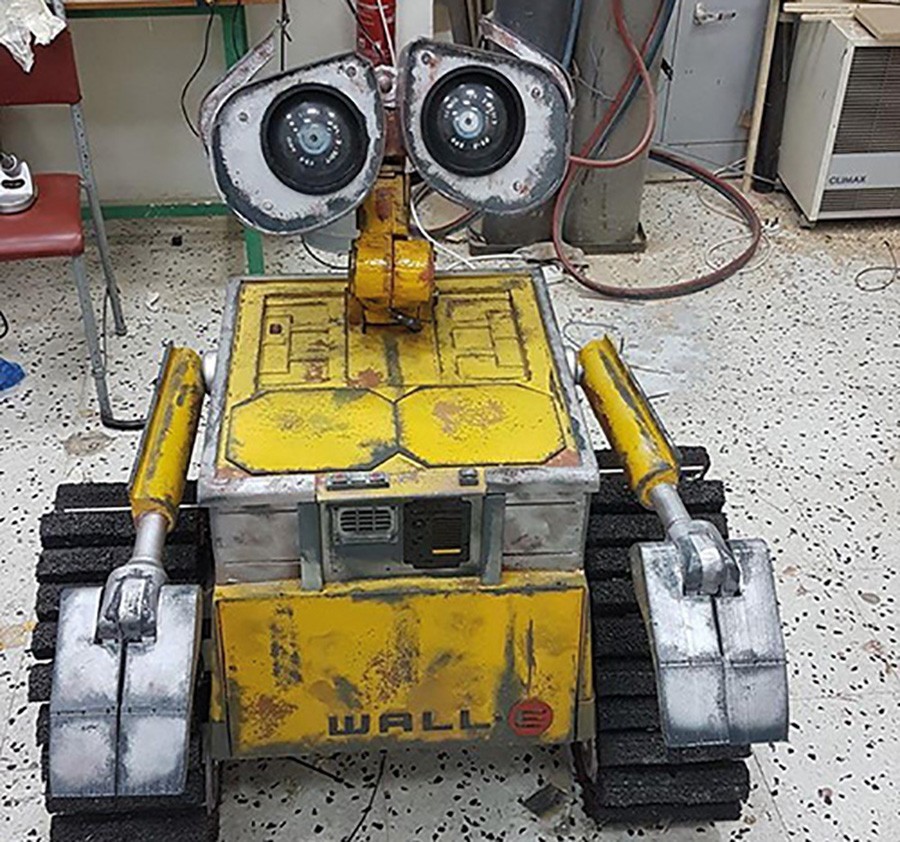

Wall-E: Ta-dah!

Think sci-fi, think robots. Whether benevolent, benign, or bloodthirsty, these artificially-intelligent automatons have long captured our imagination. However, thanks to recent advances in mechanical and programming technology, it looks like they are set to break the bonds of fiction.

Robot see, robot maps

by Rachael N. Darmanin

The term ‘robot’ tends to conjure up images of well-known metal characters like C-3PO, R2-D2, and WALL-E. The robotics research boom has finally brought real robots into our homes, workspaces, and recreational places. The pop culture icons we loved have now been replaced with the likes of robot vacuums such as the Roomba and home automation systems such as smoke detectors or Wi-Fi-enabled thermostats like the Nest. Nonetheless, building a fully autonomous mobile robot is still a momentous task. In order to purposefully travel around its environment, a mobile robot has to answer the questions ‘where am I?’, ‘where should I go next?’ and ‘how am I going to get there?’

Like humans, mobile robots must have some awareness of their surroundings in order to carry out tasks autonomously. A map comes in handy for humans. A robot could build the map itself while exploring an unknown environment, a process called Simultaneous Localisation and Mapping (SLAM). For the robot to decide which location to explore next, however, it needs an exploration strategy, and a path planner then guides the robot to that location, extending the map as it goes.

Rachael Darmanin (supervised by Dr Ing. Marvin Bugeja) used a software framework called Robot Operating System (ROS) to develop a robot system that can explore and map an unknown environment on its own. Darmanin used a differential-drive wheeled mobile robot, dubbed PowerBot, equipped with a laser scanner (LIDAR) and wheel encoders. The algorithms responsible for localising the robot analyse the sensors’ data and construct the map. In her experiments, Darmanin implemented two different exploration strategies, the Nearest Frontier and the Next Best View, on the same system to map the Control Systems Engineering Laboratory. Each experiment ran for approximately two minutes until the robot finished its exploration and produced a map of its surroundings. This was then compared to a map of the environment to evaluate the robot’s mapping accuracy. The Next Best View approach generated the most accurate maps.
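The Nearest Frontier idea can be sketched in a few lines of Python. A ‘frontier’ is a known free cell that borders unexplored space, and the robot simply heads for the closest one. The sketch below is a simplified illustration, not Darmanin’s ROS implementation: the grid encoding (`FREE`, `OCCUPIED`, `UNKNOWN`), the function names, and the use of straight-line distance rather than planned path length are all assumptions made for the example.

```python
import math

# Assumed cell encoding for this sketch only.
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontiers(grid):
    """Return (row, col) of every free cell with an unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


def nearest_frontier(grid, robot):
    """Pick the frontier cell closest to the robot, or None when the map is complete."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None
    return min(frontiers,
               key=lambda cell: math.hypot(cell[0] - robot[0], cell[1] - robot[1]))


if __name__ == "__main__":
    grid = [
        [FREE, FREE, UNKNOWN],
        [FREE, OCCUPIED, UNKNOWN],
        [FREE, FREE, FREE],
    ]
    print(nearest_frontier(grid, robot=(2, 0)))
```

When no frontier remains, `nearest_frontier` returns `None`, which corresponds to the point at which exploration is finished.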

Mobile robots with autonomous exploration and mapping capabilities have massive relevance to society. They can aid the exploration of hazardous sites, such as those of nuclear disasters, or access uncharted archaeological sites. They could also help in search and rescue operations, where they would be used to navigate disaster-stricken environments. For her doctorate, Darmanin is now looking into how multiple robots can work together to survey a large area, with a few other solutions in between.

This research was carried out as part of a Master of Science in Engineering, Faculty of Engineering, University of Malta. It was funded by the Master it! Scholarship Scheme (Malta). This scholarship is part-financed by the European Union, European Social Fund (ESF), under Operational Programme II, Cohesion Policy 2007–2013, Empowering People for More Jobs and a Better Quality of Life.

Using Muscle Activity To Control Machines

Independent living is important to everyone, yet physical impairments prevent many people from living without care. Human to Machine Interfaces (HMIs) can help people regain some of that independence. These systems use biosignals, such as electromyographic (EMG) signals, to control assistive devices. However, some devices have their drawbacks: prosthetic arms, for instance, are commonly used but are at times abandoned due to a lack of dexterity and precision.

The problem is that most of these devices use sequential control, where only one function can be articulated at a time, so fluid, life-like motions are impossible. Most daily activities, however, need simultaneous movement in multiple degrees of freedom. This need is pushing the creators of these devices to develop simultaneous control that mimics real-life movements.

Christian Grech (supervised by Dr Tracey Camilleri and co-supervised by Dr Ing. Marvin Bugeja) has developed a system that controls the position of a robotic arm using a person’s muscle activity. It consists of an HMI that continuously estimates the shoulder and elbow joint positions from surface muscle activity. Grech tested the model with a view to adding more degrees of freedom, which would lead to more fluid movements. He investigated three types of system identification methods (state space models, linear regression models, and neural networks) to model the relationship between muscle activity and the corresponding joint angles. Additionally, seven different movements were tested in real time using a robotic arm. Grech managed to develop a model that allows prosthetic arms to be used more naturally.
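Of the three methods mentioned, linear regression is the easiest to illustrate. The Python sketch below smooths a raw EMG trace into an ‘envelope’ and then fits a straight line that maps envelope values to a joint angle. It is a toy example under stated assumptions: a single EMG channel, a single joint, and invented numbers. The function names are hypothetical, and the actual models in the study are not described in this article.

```python
def emg_envelope(samples, window):
    """Rectify the signal, then smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_angle(envelope_value, slope, intercept):
    """Estimate the joint angle for one envelope sample."""
    return slope * envelope_value + intercept


if __name__ == "__main__":
    # Invented training data: envelope values and matching elbow angles (degrees).
    envelope = emg_envelope([0.1, -0.3, 0.5, -0.7, 0.9, -1.1], window=2)
    angles = [10.0, 20.0, 35.0, 55.0, 75.0, 95.0]
    slope, intercept = fit_line(envelope, angles)
    print(predict_angle(0.6, slope, intercept))
```

A real system would use several EMG channels and predict several joints at once, which is exactly where simultaneous control becomes possible.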

Of course, more research is needed to perfect this device. Ideally it would operate without delay and with minimal user discomfort. The Department of Systems & Control Engineering is carrying out more research to continue to improve the accuracy and robustness of such myoelectric (EMG) controlled devices.

This research was carried out as part of a Bachelor of Engineering degree at the Faculty of Engineering, University of Malta.

Hand pose replication using a robotic arm

Robotics is the future. Simple but true. Even today, robots support us, make the products we need, and help us get around. Without them we would be worse off. Kirsty Aquilina (supervised by Dr Kenneth Scerri) developed a system where a robotic arm could be controlled just by using one’s hand.

The setup was fed images through a single camera. The camera was pointed towards a person’s hand that held a green square marker. The computer was programmed to detect the corners of the marker. These corners give enough information to figure out the hand’s posture in 3D. By using a Kalman Filter, hand movements are tracked and converted into the angles required by the robotic arm.
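A Kalman filter blends each new, noisy measurement with what it already believes, weighting the two by how uncertain each is. The Python sketch below tracks a single coordinate, say the horizontal position of one marker corner, using the simplest possible model in which the value is assumed to drift slowly. This is a deliberately reduced illustration: the class name `ScalarKalman` and the noise values are assumptions, and the project’s filter, which tracks the full hand pose, is not reproduced here.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a random-walk motion model (assumed)."""

    def __init__(self, x0, p0, q, r):
        self.x = x0  # current estimate
        self.p = p0  # variance (uncertainty) of the estimate
        self.q = q   # process noise: how much the true value may drift per step
        self.r = r   # measurement noise: how noisy the camera reading is

    def update(self, z):
        """Fold one measurement z into the estimate and return the new estimate."""
        # Predict: the value is assumed unchanged, but uncertainty grows.
        self.p += self.q
        # Correct: the gain k decides how far to move towards the measurement.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return self.x


if __name__ == "__main__":
    # Invented noisy pixel readings of one marker corner.
    readings = [101.0, 99.5, 100.8, 100.2, 99.9]
    kf = ScalarKalman(x0=readings[0], p0=1.0, q=0.01, r=0.5)
    for z in readings[1:]:
        print(round(kf.update(z), 3))
```

A larger `r` makes the filter trust the camera less and smooth more; a larger `q` makes it react faster to genuine hand movement.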

The robotic arm looks very different from a human one and has limited movement since it has only five degrees of freedom. Within these limitations, the robotic arm can replicate a person’s hand pose. It does so immediately, so a person can easily and quickly steer the robot.

Controlling robots from afar is essential when there is no prior knowledge of the environment. It allows humans to work safely in hazardous situations such as bomb disposal, or to save lives through remote microsurgery. In the future, it could assist disabled people.

This research was performed as part of a Bachelor of Engineering (Honours) at the Faculty of Engineering.

A video of the working project can be found at: http://bit.ly/KkrF39