When it comes to technology’s advances, it has always been said that creative tasks will remain out of its reach. Jasper Schellekens writes about one team’s efforts to build a game that proves that notion wrong.

The murder mystery plot is a classic in video games; take Grim Fandango, L.A. Noire, and the epic Witcher III. But as fun as they are, they do have a downside: they don’t often offer much replayability. Once you find out the butler did it, there isn’t much point in playing again. However, a team of academics and game designers is joining forces to pair open data with computer-generated content to create a game that gives players a new mystery to solve every time they play.

The University of Malta’s Dr Antonios Liapis and New York University’s Michael Cerny Green, Gabriella A. B. Barros, and Julian Togelius want to break new ground by using artificial intelligence (AI) for content creation.

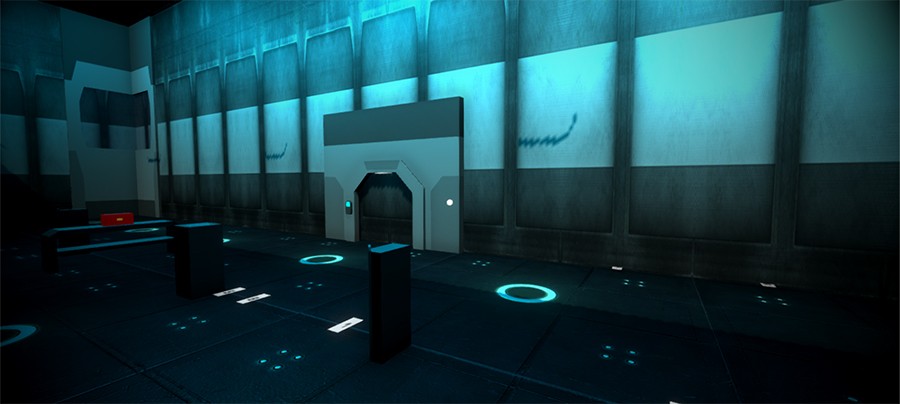

They’re handing the design job over to an algorithm. The result is a game in which all characters, places, and items are generated using open data, making every play session, every murder mystery, unique. That game is DATA Agent.

Gameplay vs Technical Innovation

AI often only enters the conversation in the form of expletives, when people play games such as FIFA and players on their virtual team don’t make the right turn, or when there is a glitch in a first-person shooter like Call of Duty. But the potential applications of AI in games are far greater than merely making objects and characters move through the game world realistically. AI can also be used to create unique content: it can be creative.

While creating content this way is nothing new, the use of AI has typically been purely algorithmic, with content being generated through computational procedures. No Man’s Sky, a space exploration game that took the world (and crowdfunding platforms) by storm in 2015, generated a lot of hype around its use of computational procedures to create varied content for each player. The makers of No Man’s Sky promised their players galaxies to explore, but enthusiasm waned in part due to the monotonous gameplay. DATA Agent learnt from this example. The game instead taps into existing information available online from Wikipedia, Wikimedia Commons, and Google Street View and uses that to create a whole new experience.

Data: the Robot’s Muse

A human designer draws on their experiences for inspiration. But what are experiences if not subjectively recorded data on the unreliable wetware that is the human brain? Similarly, a large quantity of freely available data can be used as a stand-in for human experience to ‘inspire’ a game’s creation.

According to a report by UK non-profit Nesta, machines will struggle with creative tasks. But researchers in creative computing want AI to create as well as humans can.

However, before we grab our pitchforks and run AI out of town, it must be said that games using online data sources are often rather unplayable. Creating content from unrefined data can lead to absurd and offensive gameplay situations. Angelina, a game-making AI created by Mike Cook at Falmouth University, created A Rogue Dream. This game uses Google Autocomplete functions to name the player’s abilities, enemies, and healing items based on an initial prompt by the player. Problems occasionally arose as nationalities and gender became linked to racial slurs and dangerous stereotypes. Apparently there are awful people influencing autocomplete results on the internet.

DATA Agent uses backstory to mitigate problems arising from absurd results. A revised user interface also makes playing the game more intuitive and less like poring over musty old data sheets.

So what is it really?

In DATA Agent, you are a detective tasked with finding a time-traveling murderer now masquerading as a historical figure. DATA Agent creates a murder victim based on a person’s name and builds the victim’s character and story using data from their Wikipedia article.

This makes the backstory a central aspect of the game. It is carefully crafted to explain the context of the links between the entities found by the algorithm. First, it serves to explain expected inconsistencies. Some characters’ lives did not historically overlap, but they are still grouped together as characters in the game. It also clarifies that the murderer is not a real person but rather a nefarious doppelganger. After all, it would be a bit absurd to have Albert Einstein be a witness to Attila the Hun’s murder. Also, casting a beloved figure as a killer could influence the game’s enjoyment and start riots. Not to mention that some of the people on Wikipedia are still alive, and no university could afford the inevitable avalanche of legal battles.

Rather than increase the algorithm’s complexity to identify all backstory problems, the game instead makes the issues part of the narrative. In the game’s universe, criminals travel back in time to murder famous people. This murder shatters the existing timeline, causing temporal inconsistencies: that’s why Einstein and Attila the Hun can exist simultaneously. An agent of DATA is sent back in time to find the killer, but time travel scrambles the information they receive, and they can only provide the player with the suspects’ details. The player then needs to gather intel and clues from other non-player characters, objects, and locations to try to identify the culprit, now masquerading as one of the suspects. The murderer, who, like the DATA Agent, is from an alternate timeline, also has incomplete information about the person they are impersonating and will need to improvise answers. If the player catches the suspect in a lie, they can identify the murderous, time-traveling doppelganger and solve the mystery!
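To make the lie-catching idea concrete, here is a minimal Python sketch. It is an illustration only, not DATA Agent’s actual code: the function names, the placeholder facts about ‘Suspect X’, and the way the impostor improvises (picking a wrong answer from a list of plausible alternatives) are all assumptions made for this example.

```python
import random

def impostor_answers(true_facts, known_questions, alternatives, rng):
    """Answer truthfully where the impostor knows the fact, improvise otherwise.

    All names and the improvisation rule are hypothetical, for illustration.
    """
    answers = {}
    for question, truth in true_facts.items():
        if question in known_questions:
            answers[question] = truth
        else:
            # Improvise: pick any plausible answer that is not the real one.
            wrong = [a for a in alternatives[question] if a != truth]
            answers[question] = rng.choice(wrong)
    return answers

def find_lies(answers, true_facts):
    """Return the questions whose answers contradict the recorded facts."""
    return sorted(q for q, a in answers.items() if a != true_facts[q])

# Placeholder facts about a made-up suspect (not real data).
true_facts = {"birthplace": "Town A", "occupation": "singer", "birth_year": "1900"}
alternatives = {
    "birthplace": ["Town A", "Town B", "Town C"],
    "occupation": ["singer", "painter"],
    "birth_year": ["1900", "1910", "1920"],
}

# The impostor only knows the occupation and must improvise the rest.
answers = impostor_answers(true_facts, {"occupation"}, alternatives, random.Random(0))
lies = find_lies(answers, true_facts)
print(lies)
```

A genuine suspect would repeat the recorded facts, so `find_lies` returns an empty list for them; only the doppelganger’s improvised answers stand out.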

De-mystifying the Mystery

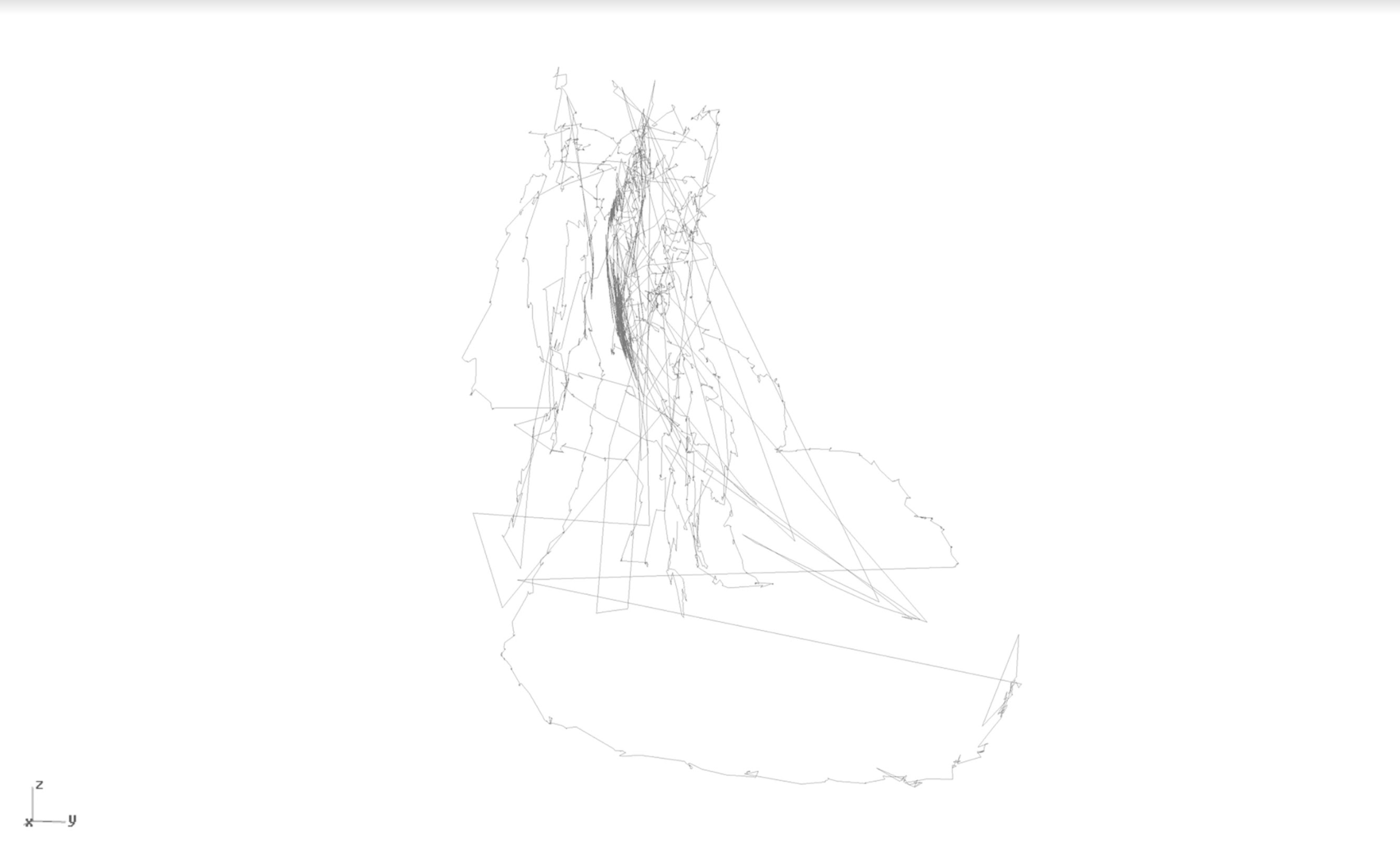

The murder mystery starts where murder mysteries always do, with a murder. And that starts with identifying the victim. The victim’s name becomes the seed for the rest of the characters, places, and items. Suspects are chosen based on their links to the victim and must always share a common characteristic. For example, Britney Spears and Diana Ross are both classified as ‘singer’ in the data used. The algorithm searches for people with links to the victim and turns them into suspects.

But a good murder mystery needs more than just suspects and a victim. As Sherlock Holmes says, a good investigation is ‘founded upon the observation of trifles.’ So the story must also have locations to explore, objects to investigate for clues, and people to interrogate. These are the game’s ‘trifles’ and that’s why the algorithm also searches for related articles for each suspect. The related articles about places are converted into locations in the game, and the related articles about people are converted into NPCs. Everything else is made into game items.

The Case of Britney Spears

This results in games like “The Case of Britney Spears” with Aretha Franklin, Diana Ross, and Taylor Hicks as the suspects. In the case of Britney Spears, the player could interact with NPCs such as Whitney Houston, Jamie Lynn Spears, and Katy Perry. They could also travel from McComb in Mississippi to New York City. As they work their way through the game, they would uncover that the evil time-traveling doppelganger had taken the place of the greatest diva of them all: Diana Ross.
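The generation steps described in the previous section can be sketched in Python using this case as a toy example. This is a rough illustration under stated assumptions, not the team’s implementation: the `build_mystery` function, the three lookup tables, the specific links between articles, and the ‘Microphone’ item are all invented for the sketch, and it assumes the ‘common characteristic’ is one shared with the victim. A real generator would query open data sources instead of hard-coded dictionaries.

```python
# Toy stand-in for open data. Links and tags are made up for illustration.
KIND = {
    "Britney Spears": "person", "Aretha Franklin": "person",
    "Diana Ross": "person", "Taylor Hicks": "person",
    "Whitney Houston": "person", "Jamie Lynn Spears": "person",
    "Katy Perry": "person",
    "McComb": "place", "New York City": "place",
    "Microphone": "other",  # hypothetical item
}
TAGS = {
    "Britney Spears": {"singer"}, "Aretha Franklin": {"singer"},
    "Diana Ross": {"singer"}, "Taylor Hicks": {"singer"},
}
LINKS = {
    "Britney Spears": ["Aretha Franklin", "Diana Ross", "Taylor Hicks", "McComb"],
    "Aretha Franklin": ["Whitney Houston", "New York City"],
    "Diana Ross": ["Britney Spears", "Katy Perry", "Microphone"],
    "Taylor Hicks": ["Jamie Lynn Spears", "McComb", "Aretha Franklin"],
}

def build_mystery(victim, max_suspects=3):
    """Use the victim's name as the seed for suspects, locations, NPCs, items."""
    victim_tags = TAGS.get(victim, set())
    # Suspects: linked people who share at least one characteristic with the victim.
    suspects = [
        name for name in LINKS.get(victim, [])
        if KIND.get(name) == "person" and TAGS.get(name, set()) & victim_tags
    ][:max_suspects]

    locations, npcs, items = [], [], []
    for suspect in suspects:
        for name in LINKS.get(suspect, []):
            if name == victim or name in suspects:
                continue  # already part of the cast
            kind = KIND.get(name, "other")
            # Places become locations, people become NPCs, the rest become items.
            bucket = locations if kind == "place" else npcs if kind == "person" else items
            if name not in bucket:
                bucket.append(name)
    return {"victim": victim, "suspects": suspects,
            "locations": locations, "npcs": npcs, "items": items}

mystery = build_mystery("Britney Spears")
print(mystery)
```

Changing the seed name is all it takes to produce a different cast, which is what makes each play session unique.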

Oops, I learned it again

DATA Agent goes beyond refining the technical aspects of organising data and gameplay. In an age when so much freely available information is ignored because it is presented in an inaccessible or boring format, data games could be game-changing (pun intended).

In 1985, Broderbund released their game Where in the World is Carmen Sandiego?, where the player tracked criminal henchmen and eventually mastermind Carmen Sandiego herself by following geographical trivia clues. It was a surprise hit, becoming Broderbund’s third best-selling Commodore game as of late 1987. It had tapped into an unanticipated market, becoming an educational staple in many North American schools.

Facts may have lost some of their lustre since the rise of fake news, but games like Where in the World is Carmen Sandiego? are proof that learning doesn’t have to be boring. And this is where products such as DATA Agent could thrive. After all, the game uses real data and actual facts about the victims and suspects. The player’s main goal is to catch the doppelganger’s mistake in their recounting of facts, requiring careful attention. The kind of attention you may not have when reading a textbook. This type of increased engagement with material has been linked to improving information retention. In the end, when you’ve traveled through the game’s various locations, found a number of items related to the murder victim, and uncovered the time-traveling murderer, you’ll hardly be aware that you’ve been taught.

‘Education never ends, Watson. It is a series of lessons, with the greatest for the last.’ – Sir Arthur Conan Doyle, His Last Bow.