‘Fun, exciting and challenging’ is how Federica, NOVA logistics officer, described her undergraduate experience with UM’s Department of Digital Arts. Now in their final year, the Bachelor of Fine Arts cohort, guided by Dr Trevor Borg, is dotting all their i’s and crossing all their t’s in preparation for their thesis exhibition. THINK took the opportunity to talk with a few members of the logistics team to learn what NOVA is all about.

Culinary Medicine: A Missing Ingredient in Medical Education

For her second-year physiology research project conducted under the supervision of Chev. Prof. Renald Blundell from UM’s Department of Physiology and Biochemistry, Courtney Ekezie focused on sustainable food systems and their impact on human health. The study briefly mentioned culinary medicine – an aspect that later inspired this article for THINK.

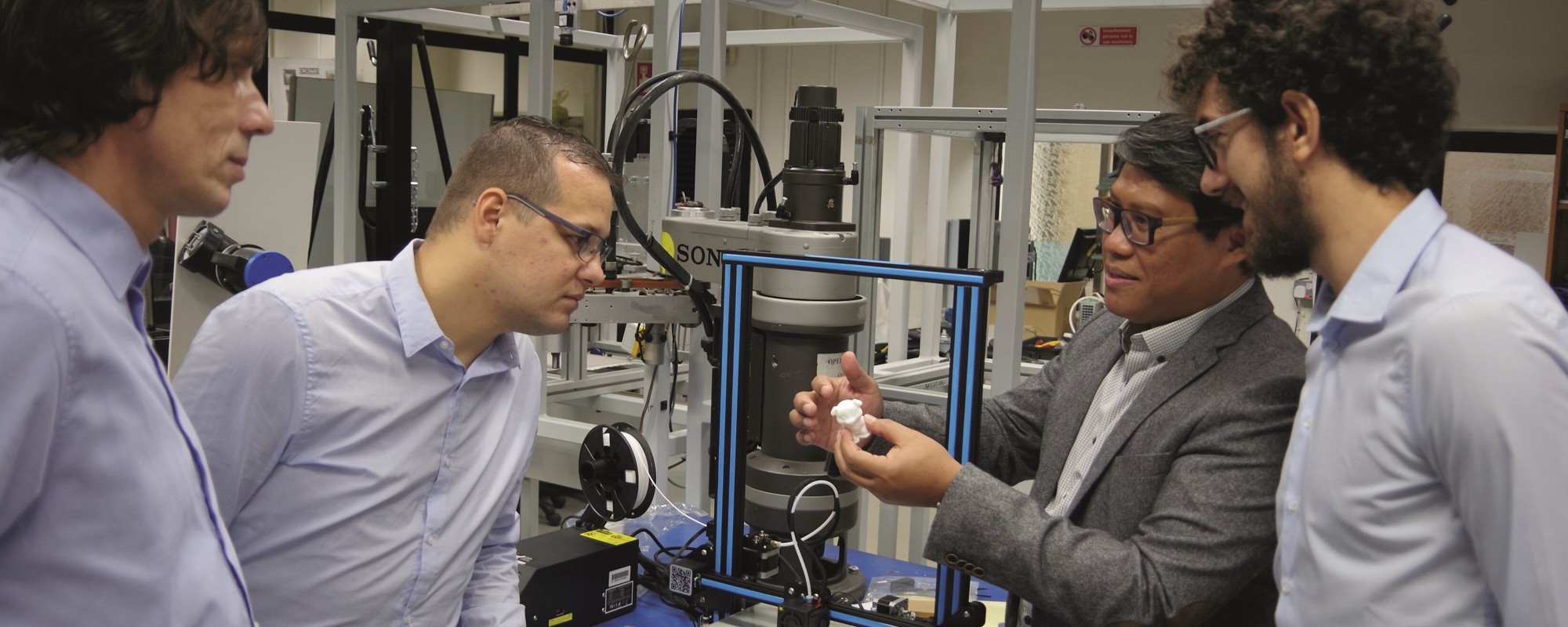

3D Printing Balloons Inflates Enthusiasm for Future Technology

With the rise in popularity of 3D printing, the medium has become more accessible. What’s more, recent advancements in the field have made the technology rather intriguing. THINK explores the latest UM research on the development of 3D-printed balloons.

Green Walls: A Sustainable and Innovative System to Reduce the Impact of Pollution

In cities, pollution remains a pressing environmental concern for pedestrians. THINK sits down with Prof. Ing. Daniel Micallef and Dr Edward Duca from UM, who are using plants and physical barriers to combat traffic-generated pollution.

Top 11 Real-Life AI Innovations Shaping Our World Today

Automating mundane tasks, incredible problem solving, and the wonder of creating smarter and smarter technology. What’s not to love about robots and AI? (Besides the whole losing our jobs thing). Check out our favourite 11 real-life AI and Robots below!

Innovators play a different game

South Africa-based entrepreneur Rapelang Rabana says that when it comes to innovation, the skills and experiences we accumulate over our lifetime are better resources than formal qualifications. Words by Daiva Repeckaite.

Lasers bonding layers

fused filament fabrication, laser, Laser Engineering and Development Ltd., 3D printing, Iren Bencze, Gabor Molnar, Arif Rochman

To patent or not to patent?

As universities and research institutions look to protect the knowledge they develop, András Havasi questions time frames, limited resources, and associated risks.

The last decade has seen the number of patent applications worldwide grow exponentially. Today’s innovation- and knowledge-driven economy certainly has a role to play in this.

With over 21,000 European and around 8,000 US patent applications in 2018, the fields of medical technologies and pharmaceuticals—healthcare industries—are leading the pack.

Why do we need all these patents?

A patent grants its owner the right to exclude others from making, using, selling, and importing an invention for a limited time period of 20 years. What this means is market exclusivity should the invention be commercialised within this period. If the product sells, the owner will benefit financially. The moral of the story? A patent is but one early piece of the puzzle in a much longer, more arduous journey towards success.

Following a patent application, an invention usually needs years of development for it to reach its final product stage. And there are many ‘ifs’ and ‘buts’ along the way to launching a product in a market; only at this point can a patent finally start delivering the financial benefits of exclusivity.

Product development is a race against time. The longer the development phase, the shorter the effective market exclusivity a product will have, leaving less time to make a return on the development and protection costs. If this remaining time is not long enough, and the overall balance stays in the negative, the invention could turn into a financial failure.

Some industries are more challenging than others. The IT sector is infamous for its blink-and-you-miss-it evolution. The average product life cycle of software has shrunk from between three and five years to between six and 12 months. However, more traditional sectors cannot move that quickly.

The health sector is one example. Research, development, and regulatory approval take much longer, spanning an average of 12–13 years from a drug’s inception to its release on the market, leaving only seven to eight years for commercial exploitation.
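
The arithmetic behind those figures can be sketched in a few lines. This is only an illustration: it assumes the 20-year term starts ticking at the same moment development begins, and the function name is made up for the example.

```python
PATENT_TERM_YEARS = 20  # term of protection quoted in the article


def effective_exclusivity(years_to_market, term=PATENT_TERM_YEARS):
    """Years of market exclusivity left once the product finally launches.

    Simplifying assumption: development starts when the patent clock
    starts, so every year spent developing is a year of protection lost.
    """
    return max(term - years_to_market, 0)


# The drug development times mentioned above: 12 and 13 years.
for years in (12, 13):
    print(years, "years to market ->", effective_exclusivity(years), "years of exclusivity")
```

Under these assumptions, a product that takes longer than the full term to reach the market is left with no exclusivity at all, which is the failure case the race against time describes.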

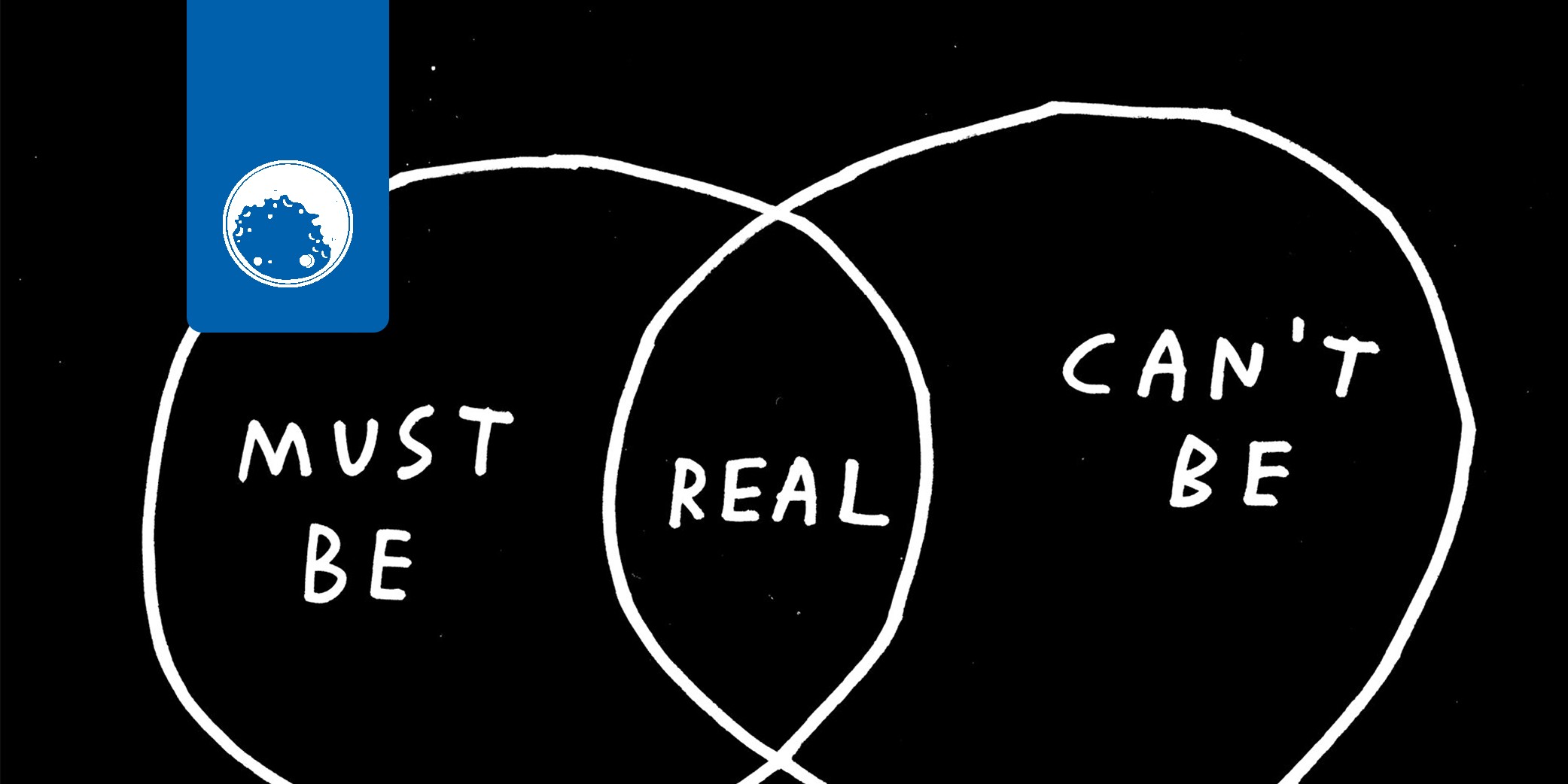

So the real value of a patent is the effective length of market exclusivity, factored in with the size of the market potential. Can exclusivity in the market give a stronger position and increase profits to make a sufficient return on investment? All this makes patenting risky, irrespective of the technological content—it is a business decision first and foremost.

Companies see the opportunity in this investment and are happy to take the associated risks. But why does a university bother with patents at all and what are its aims in this ‘game’?

Universities are hubs of knowledge creation and today’s economy sees the value in that. As a result, research institutions intend to use and commercialise their know-how. And patenting is an essential part of that journey.

The ultimate goal and value of a patent remain the same; however, it serves a different purpose for universities. Patents enable them to legally protect their rights to inventions they helped nurture and claim financial compensation if the invention is lucrative. At the same time, patent protection allows the researchers to freely publish their results without jeopardising the commercial exploitation of the invention. It’s a win-win situation. Researchers can advance their careers, while the university can do its best to exploit the output of their work, bolster its social impact, and eventually reinvest the benefits into its core activity: research.

At what price?

Patenting may start at a few hundred or thousand euros, but the costs can easily accumulate to tens or even hundreds of thousands over the years. However, this investment carries more risk for universities than for companies.

Risks have two main sources. Firstly, universities’ financial capabilities are usually more limited when compared to those of businesses. Secondly, universities are not the direct sellers of the invention’s eventual final product. For that, they need to find their commercial counterpart, a company that sees the invention’s value and commercial potential.

This partner needs to be someone who is ready to invest in the product’s development. This is the technology transfer process, where the invention leaves the university and enters the industry. This is the greatest challenge for university inventions. Again, here the issue of time raises its head. The process of finding suitable commercial partners further shortens the effective period of market exclusivity.

A unique strategy is clearly needed here. Time and cost are top priorities. All potential inventions deserve a chance, but risks and potential losses need to be minimised. It is the knowledge transfer office’s duty to manage this.

We minimise risks and losses by finding (or trying to find) the sweet spot of time frames with a commercial partner, all while balancing commercial potential and realistic expectations. The answer boils down to: do we have enough time to take this to market and can we justify the cost?

Using a cost-optimised patenting strategy, we can postpone the first big jump in the costs to two and a half years. After this point, the costs start increasing significantly. The rule of thumb is that about five years into a patent’s lifetime the likelihood of licensing drops to a minimum. So on a practical level, a university invention needs to be commercialised very quickly.

Maintaining a patent beyond these initial years can become unfeasible, because even the most excellent research doesn’t justify the high patenting costs if the product is not wanted by industry. And the same applies to all inventions. Even in the health sector, despite product development cycles being longer, if a product isn’t picked up, patents can be a huge waste of money.

Patenting is a critical tool for research commercialisation. And universities should protect inventions and find the resources to file patent applications. However, the opportunities’ limited lifetime cannot be ignored. A university cannot fall into the trap of turning an interesting opportunity into a black hole of slowly expiring hopes. It must be diligent and level-headed, always keeping an ear to the ground for the golden goose that will make it all worth it.

The unusual suspects

When it comes to technology’s advances, it has always been said that creative tasks will remain out of its reach. Jasper Schellekens writes about one team’s efforts to build a game that proves that notion wrong.

The murder mystery plot is a classic in video games; take Grim Fandango, L.A. Noire, and the epic Witcher III. But as fun as they are, they do have a downside to them—they don’t often offer much replayability. Once you find out the butler did it, there isn’t much point in playing again. However, a team of academics and game designers are joining forces to pair open data with computer generated content to create a game that gives players a new mystery to solve every time they play.

The University of Malta’s Dr Antonios Liapis and New York University’s Michael Cerny Green, Gabriella A. B. Barros, and Julian Togelius want to break new ground by using artificial intelligence (AI) for content creation.

They’re handing the design job over to an algorithm. The result is a game in which all characters, places, and items are generated using open data, making every play session, every murder mystery, unique. That game is DATA Agent.

Gameplay vs Technical Innovation

AI often only enters the conversation in the form of expletives, when people play games such as FIFA and players on their virtual team don’t make the right turn, or when there is a glitch in a first-person shooter like Call of Duty. But the potential applications of AI in games are far greater than merely making objects and characters move through the game world realistically. AI can also be used to create unique content: it can be creative.

While creating content this way is nothing new, the focus on using AI has typically been purely algorithmic, with content being generated through computational procedures. No Man’s Sky, a space exploration game that took the world (and crowdfunding platforms) by storm in 2015, generated a lot of hype around its use of computational procedures to create varied and different content for each player. The makers of No Man’s Sky promised their players galaxies to explore, but enthusiasm waned in part due to the monotonous gameplay. DATA Agent learnt from this example. The game instead taps into existing information available online from Wikipedia, Wikimedia Commons, and Google Street View and uses that to create a whole new experience.

Data: the Robot’s Muse

A human designer draws on their experiences for inspiration. But what are experiences if not subjectively recorded data on the unreliable wetware that is the human brain? Similarly, a large quantity of freely available data can be used as a stand-in for human experience to ‘inspire’ a game’s creation.

According to a report by UK non-profit Nesta, machines will struggle with creative tasks. But researchers in creative computing want AI to create as well as humans can.

However, before we grab our pitchforks and run AI out of town, it must be said that games using online data sources are often rather unplayable. Creating content from unrefined data can lead to absurd and offensive gameplay situations. Angelina, a game-making AI created by Mike Cook at Falmouth University, created A Rogue Dream. This game uses Google Autocomplete functions to name the player’s abilities, enemies, and healing items based on an initial prompt by the player. Problems occasionally arose as nationalities and gender became linked to racial slurs and dangerous stereotypes. Apparently there are awful people influencing autocomplete results on the internet.

DATA Agent uses backstory to mitigate problems arising from absurd results. A revised user interface also makes playing the game more intuitive and less like poring over musty old data sheets.

So what is it really?

In DATA Agent, you are a detective tasked with finding a time-traveling murderer now masquerading as a historical figure. DATA Agent creates a murder victim based on a person’s name and builds the victim’s character and story using data from their Wikipedia article.

This makes the backstory a central aspect to the game. It is carefully crafted to explain the context of the links between the entities found by the algorithm. Firstly, it serves to explain expected inconsistencies. Some characters’ lives did not historically overlap, but they are still grouped together as characters in the game. It also clarifies that the murderer is not a real person but rather a nefarious doppelganger. After all, it would be a bit absurd to have Albert Einstein be a witness to Attila the Hun’s murder. Also, casting a beloved figure as a killer could influence the game’s enjoyment and start riots. Not to mention that some of the people on Wikipedia are still alive, and no university could afford the inevitable avalanche of legal battles.

Rather than increase the algorithm’s complexity to identify all backstory problems, the game instead makes the issues part of the narrative. In the game’s universe, criminals travel back in time to murder famous people. This murder shatters the existing timeline, causing temporal inconsistencies: that’s why Einstein and Attila the Hun can exist simultaneously. An agent of DATA is sent back in time to find the killer, but time travel scrambles the information they receive, and they can only provide the player with the suspects’ details. The player then needs to gather intel and clues from other non-player characters (NPCs), objects, and locations to try and identify the culprit, now masquerading as one of the suspects. The murderer, who, like the DATA Agent, is from an alternate timeline, also has incomplete information about the person they are impersonating and will need to improvise answers. If the player catches the suspect in a lie, they can identify the murderous, time-traveling doppelganger and solve the mystery!

De-mystifying the Mystery

The murder mystery starts where murder mysteries always do, with a murder. And that starts with identifying the victim. The victim’s name becomes the seed for the rest of the characters, places, and items. Suspects are chosen based on their links to the victim and must always share a common characteristic. For example, Britney Spears and Diana Ross are both classified as ‘singer’ in the data used. The algorithm searches for people with links to the victim and turns them into suspects.

But a good murder mystery needs more than just suspects and a victim. As Sherlock Holmes says, a good investigation is ‘founded upon the observation of trifles.’ So the story must also have locations to explore, objects to investigate for clues, and people to interrogate. These are the game’s ‘trifles’ and that’s why the algorithm also searches for related articles for each suspect. The related articles about places are converted into locations in the game, and the related articles about people are converted into NPCs. Everything else is made into game items.
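
The sorting step described above can be sketched roughly as follows. This is not DATA Agent’s actual code: the function name, the data layout, and the toy entries (including the ‘Hypothetical Lawyer’ and the ‘Gold record’) are assumptions made for illustration, and ‘sharing a common characteristic’ is read here as sharing at least one label with the victim.

```python
def build_mystery(victim, links, labels, related):
    """Turn a victim's name into suspects, locations, NPCs and items.

    links:   person -> list of people linked to them
    labels:  person -> set of characteristics (e.g. {"singer"})
    related: person -> list of (article title, kind), where kind is
             "place", "person" or anything else
    """
    victim_labels = labels.get(victim, set())
    # Suspects: linked people who share a characteristic with the victim.
    suspects = [p for p in links.get(victim, [])
                if labels.get(p, set()) & victim_labels]

    mystery = {"victim": victim, "suspects": suspects,
               "locations": [], "npcs": [], "items": []}
    for suspect in suspects:
        for title, kind in related.get(suspect, []):
            if kind == "place":
                bucket = "locations"
            elif kind == "person":
                bucket = "npcs"
            else:
                bucket = "items"  # everything else becomes a game item
            if title not in mystery[bucket]:
                mystery[bucket].append(title)
    return mystery


# Toy data, invented for the example (not real Wikipedia link data).
LINKS = {"Britney Spears": ["Aretha Franklin", "Diana Ross",
                            "Taylor Hicks", "Hypothetical Lawyer"]}
LABELS = {"Britney Spears": {"singer"}, "Aretha Franklin": {"singer"},
          "Diana Ross": {"singer"}, "Taylor Hicks": {"singer"},
          "Hypothetical Lawyer": {"lawyer"}}
RELATED = {"Diana Ross": [("New York City", "place"),
                          ("Whitney Houston", "person"),
                          ("Gold record", "other")],
           "Taylor Hicks": [("Katy Perry", "person"),
                            ("New York City", "place")]}

mystery = build_mystery("Britney Spears", LINKS, LABELS, RELATED)
print(mystery)
```

In this toy run the lawyer is dropped because they share no label with the victim, and New York City appears only once even though two suspects link to it.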

The Case of Britney Spears

This results in games like “The Case of Britney Spears” with Aretha Franklin, Diana Ross, and Taylor Hicks as the suspects. In the case of Britney Spears, the player could interact with NPCs such as Whitney Houston, Jamie Lynn Spears, and Katy Perry. They could also travel from McComb in Mississippi to New York City. As they work their way through the game, they would uncover that the evil time-traveling doppelganger had taken the place of the greatest diva of them all: Diana Ross.

Oops, I learned it again

DATA Agent goes beyond refining the technical aspects of organising data and gameplay. In the age where so much freely available information is ignored because it is presented in an inaccessible or boring format, data games could be game-changing (pun intended).

In 1985, Broderbund released their game Where in the World is Carmen Sandiego?, where the player tracked criminal henchmen and eventually mastermind Carmen Sandiego herself by following geographical trivia clues. It was a surprise hit, becoming Broderbund’s third best-selling Commodore game as of late 1987. It had tapped into an unanticipated market, becoming an educational staple in many North American schools.

Facts may have lost some of their lustre since the rise of fake news, but games like Where in the World is Carmen Sandiego? are proof that learning doesn’t have to be boring. And this is where products such as DATA Agent could thrive. After all, the game uses real data and actual facts about the victims and suspects. The player’s main goal is to catch the doppelganger’s mistake in their recounting of facts, requiring careful attention: the kind of attention you may not have when reading a textbook. This type of increased engagement with material has been linked to improving information retention. In the end, when you’ve traveled through the game’s various locations, found a number of items related to the murder victim, and uncovered the time-traveling murderer, you’ll hardly be aware that you’ve been taught.

‘Education never ends, Watson. It is a series of lessons, with the greatest for the last.’ – Sir Arthur Conan Doyle, His Last Bow.

Accidental science

Do scientists need to have a clear end-goal before they dive down the research rabbit hole? Sara Cameron speaks to Dr André Xuereb about the winding journey that led to the unintended discovery of a new way to detect earthquakes.

Some of science’s greatest accomplishments were achieved when no one was looking with a purpose. When studying a petri dish of bacterial cultures, Alexander Fleming had no intention of discovering penicillin, and yet he changed the course of human history. Henri Becquerel was trying to make the most of dwindling sunlight to expose photographic plates using uranium when he stumbled upon radioactivity. A chance encounter between a chocolate bar in Percy Spencer’s pocket and the radar machine that melted it sparked the invention of the household microwave.

One would think that with this track record of coincidental breakthroughs, the field of science and research would continue to flourish by embracing curiosity and experimentation. But as interest grows and funding avenues pop up for researchers, there has been a shift in mindset.

Money changes things. And while it does allow people to work hard and answer more questions, it has also fostered expectations from stakeholders. Investors want fast results that will improve their business or product. We, the end-user, want to see our lives changed, one discovery at a time. We’re no longer satisfied with research for research’s sake. At least for the most part.

Quantum physicist Dr André Xuereb (Faculty of Science, University of Malta) is all too aware of this issue and its effects on scientific progress. Xuereb explains scientists’ frustration: ‘A lot of funding, in Malta and elsewhere, is dedicated to bringing mature ideas to the market, but that is the tip of the iceberg. There is an entire innovation lifecycle that must be funded and sustained for good ideas to develop and eventually become technologies. The starting point is often an outlandish idea, and eventually, sometimes by accident, great new technologies are born,’ he says.

STARTING POINTS

Over the past few years, Xuereb has been exploring new possibilities in quantum mechanics.

The field of quantum mechanics attempts to explain the behaviour of atoms and what makes them. Its mathematical principles show that atoms and other particles can exist in states beyond what can be described by the physics of the ordinary objects that surround us. For example, quantum theorems that show objects existing in two places at once offer a scientific basis for teleportation.

Star Trek fans know exactly what we’re talking about, but for those rolling their eyes, the reality is that many things in our everyday lives wouldn’t exist without at least some understanding of quantum physics. Our computers, phones, GPS navigation, digital cameras, LED TV screens, and lasers are all products of the quantum revolution.

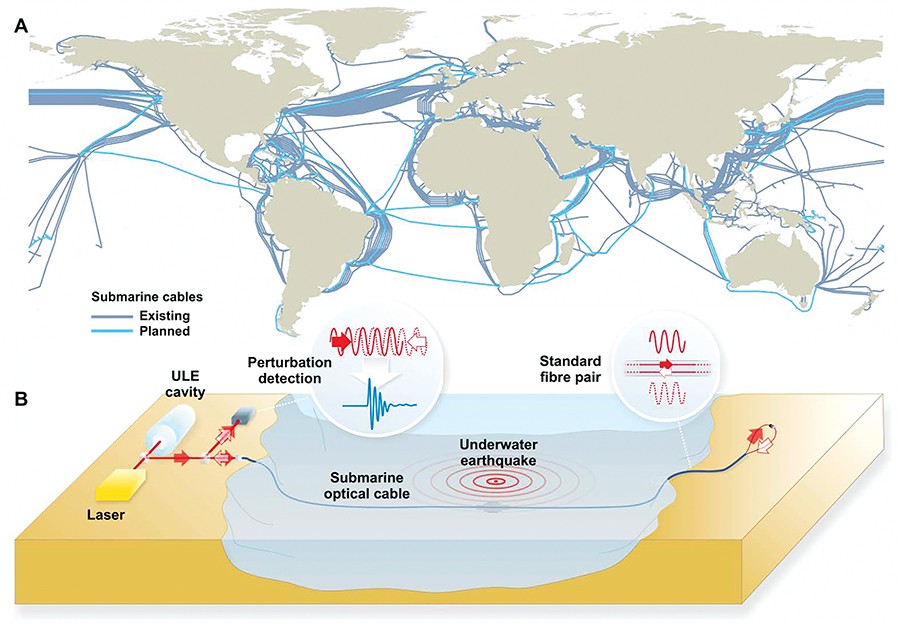

Another technology that has changed the way we live and work is modern telecommunications technology. When you pick up your phone to message a friend overseas, call a loved one, or email a colleague, telecoms networks spanning the earth carry the data across continents and under oceans through thousands of kilometres of optical fibres.

The 96-kilometre submarine telecommunication link between Malta and Sicily was Xuereb’s focus in 2015. He organised a team of European experts to begin investigating the potential for building a quantum link between the two countries.

The Austrian, Italian, and Maltese trio were particularly interested in a strange property called ‘entanglement.’ This is a curious property of quantum objects that can be created in pairs of photons, connecting them together. This entanglement can be distributed by giving one of these photons to a friend and keeping the other for yourself, establishing a quantum link between you and this friend—an invisible quantum ‘wire,’ so to speak.

Through this connection, you and your friend can send data faster than over ordinary connections; by modifying the state of the photon at your end, you can instantly affect the state of your friend’s photon, no matter how far apart you are in the universe. Using quantum links such as these, all manner of feats can be performed, including super-secure communications. ‘We wanted to demonstrate that quantum entanglement can be distributed using a 100km-long, established telecoms link, using what was already available, with no laboratory facilities in sight,’ explains Xuereb. His team also wanted to demonstrate that entanglement using polarisation of light was possible. Previously it was thought impossible in submarine conditions, even though it has some very technologically convenient properties.

Two years and several complex experiments later, Xuereb and his team have indeed proven the possibility of quantum communications over submarine telecommunication networks. And with one question answered, a slew more lifted their heads.

The Italian subteam, led by Davide Calonico (Istituto Nazionale di Ricerca Metrologica, INRIM), now turned their attention to a different set of questions for the Malta-Sicily telecommunication network.

MORE TO COME…

Atomic clocks keep the world ticking by providing precise timekeeping for GPS navigation, internet synchronisation, banking transactions, and particle science experiments. In all these activities, exact timing is essential.

These extremely accurate clocks use atomic oscillations as a frequency reference, giving them an average error of only one second every 100 million years. Connecting the world’s atomic clocks would create an international common time base, which would allow people to better synchronise their activities, even over vast distances. For example, bank transactions and trading could happen much faster than they do at present.
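
To get a feel for what ‘one second every 100 million years’ means, the figure can be turned into a fractional error. The short calculation below is a back-of-the-envelope sketch; it assumes a 365.25-day year, and the function name is invented for the example.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumption: Julian year


def fractional_error(seconds_lost, over_years):
    """Clock error expressed as a fraction of the elapsed time."""
    return seconds_lost / (over_years * SECONDS_PER_YEAR)


# One second gained or lost over 100 million years.
error = fractional_error(1, 100e6)
print(f"fractional error: {error:.1e}")
print(f"drift per day: {error * 86400:.1e} seconds")
```

The result is a few parts in ten thousand trillion, which is why comparing such clocks demands a link that is itself extraordinarily stable.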

This can’t be done by bouncing signals off spaceborne satellites, since tiny changes in the atmosphere or in satellite orbits can ruin the signal. This is where the fibre-optic network comes back into the picture. Researchers have recently been looking at the telecoms network as a way to make this synchronisation possible. Scientists can use an ultra-stable laser to shine a reference beam along these fibres. Monitoring the optical path and the phase of the optical signal of the beam can then allow them to compare and synchronise the clocks at both ends.

Whilst Calonico and his team were testing this idea on the submarine network between Malta and Sicily, a few thousand kilometres away, metrology expert Dr Giuseppe Marra was monitoring an 80km link in England. In October 2016, everything changed. One night, he noticed some noise in his data. Unable to attribute the noise to misbehaving equipment or a monitoring malfunction, his gut told him to turn to the news from his home country, Italy. There, he saw that the town of Amatrice had been devastated by an earthquake of 5.9 magnitude.

Further testing confirmed that the waveforms Marra saw in the fibre data matched those recorded by the British Geological Survey during the earthquake. His system even recorded quakes as far away as New Zealand, Mexico and Japan. This was huge news.

In simple terms, the seismic waves from an earthquake tremor cause a series of very slight expansions and contractions in fibre-optic cables, which in turn modify the phase of the cable’s reference beam. These tiny disturbances can be captured by specialised measurement tools at the ends of the cable, capable of detecting changes on the scale of femtoseconds: a millionth of a billionth of a second.
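
A rough calculation shows how small these disturbances are. The sketch below converts a timing shift into the change in fibre length that would produce it, using the relation delay = n × length / c. It is a simplification: the real measurement tracks optical phase, and the refractive index used here (about 1.468, a typical value for silica telecom fibre) is an assumption, as is the function name.

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second, in vacuum
FIBRE_INDEX = 1.468           # assumption: typical silica telecom fibre


def length_change_for_delay(delay_seconds, index=FIBRE_INDEX):
    """Fibre length change (metres) that shifts arrival time by delay_seconds.

    Light travels at c / n inside the fibre, so delay = n * length / c
    and therefore length = c * delay / n.
    """
    return SPEED_OF_LIGHT * delay_seconds / index


one_femtosecond = 1e-15
stretch = length_change_for_delay(one_femtosecond)
print(f"1 fs corresponds to about {stretch * 1e6:.2f} micrometres of fibre")
```

Under these assumptions, a femtosecond of delay corresponds to roughly a fifth of a micrometre of stretch, spread along a cable that may be tens of kilometres long.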

The majority of seismometers are land-based, so small earthquakes more than a few hundred kilometres from the coast go undetected. Conventional seismometers designed to monitor the seabed are expensive and don’t usually monitor underwater seismic activity in real time. Telecoms networks could offer a solution that would allow us to observe and understand seismic activity in the world’s vast oceans. They would open up a new window through which to observe the processes taking place underneath Earth’s surface, teaching us more about how our planet works. In future, they may even make it possible to detect earlier the large earthquakes that cause untold devastation.

The beauty of this discovery is that the infrastructure already exists. No new work is needed. All that is required is to set up lasers at either end of these cables, using up a tiny portion of a cable’s bandwidth without interfering with its use.

THREADS COMING TOGETHER

Marra got together with Xuereb and Calonico, who were already working on the undersea network between Malta and Sicily, to conduct some initial tests. The underwater trial, published in the world-leading journal Science this year along with the terrestrial results, was able to detect a weak tremor of 3.4 magnitude off Malta’s coast. Its epicentre was 89km from the cable’s nearest point, which reinforced the idea that cables can be used as a global seismic detector. ‘We would be able to monitor in real time tiny vibrations all over the planet. This would turn the existing network into a microphone for the Earth,’ Xuereb explains.

The system hasn’t been tested on an ocean cable. An interesting target would be a cable that crosses the mid-Atlantic ridge, where the drifting of Eurasian and African tectonic plates creates an area of high seismic activity. Based on the results so far and on conservative assumptions, trials are being planned for the near future on a larger scale, which will give us a better idea of the possibilities.

FURTHER DOWN THE RABBIT HOLE…

In many ways, it is understandable that agencies that fund science favour smaller, more goal-driven research programmes. They seek tangible results in a timely manner to reap quick rewards. But as this story goes to show, a change in mentality is needed.

‘If we don’t fund the initial few steps of the innovation lifecycle, how will we ever develop new technologies? This is a problem that affects scientists from many countries and comes from a mismatch in timescales. A year is a long time in politics, but a decade is often a short time in science,’ Xuereb comments.

Innovation has to start from somewhere, and it often starts from ideas which may have no apparent relevance to our everyday lives. We need to support researchers by keeping an open mind to unknown long-term possibilities—or the world might not only miss the next earthquake but also the next life-changing discovery.