INDIE GAMES

How can a video game ask questions about life, art, and frustration? Giuliana Barbaro-Sant met up with Dr Pippin Barr to tell us about his game adaptation of Marina Abramović’s artwork The Artist is Present.

In each creative act, a personal price is paid. When the project you have been working on so hard falls to pieces because of funding, it is hard to accept its demise. The feeling of failure, betrayal, and loneliness is an easy trap to fall into. This is the independent game maker’s industry: a bloodthirsty world rife with competition, sucking pockets dry from the very beginning of the creative process.

Maltese game makers face an even harsher reality. Not all game makers are lucky enough to reach the finish line, publish, and make good money; in fact, most never do. Yet if and when they get there, it is often thanks to the passion and dedication they put into their creation, together with the continuous support of others.

Dr Pippin Barr has always had a passion for making things, be it playing with blocks or doodling. His time lecturing at the Center for Computer Games Research at the IT University of Copenhagen, together with his recent collaboration with the newly opened Institute of Digital Games at the University of Malta, only gave this passion a new form: Pippin makes games. At the Museum of Modern Art in New York he exhibited his best-known work: the game rendition of Marina Abramović’s The Artist is Present. He came up with the idea while preparing lectures about how artists evoke emotions through laborious means in their artworks. In The Artist is Present, Abramović sits still facing hundreds of thousands of people, one at a time, and simply stares into their eyes for as long as each participant wishes.

There is more to this performance than meets the eye. Beneath the simple façade, Barr saw real depth. Through eye contact, the artist and audience forge a unique connection. All barriers drop, and human emotion flows with a rawness that games are so ‘awful’ at embodying. Yet, paradoxically, the preparation behind the performance demands a military discipline that games embrace only too well. Not only does the artist have to physically condition herself to withstand over 700 hours of performing, but the audience also prepares for the experience in its own way, by patiently disciplining itself while waiting for a turn.

“It’s a pretty lonely road and it can be tough when you’re stuck with yourself”

Pippin Barr

‘Good research is, after all, creative,’ according to Pippin Barr. By combining his academic background with his creative impulse, he made an art game, a marriage between art and video games. These are games about games, which test their values and limits. Barr relishes the very idea of questioning the way things work. His self-reflexive games serve as a platform for him to call into question life’s so-called certainties, in a way that is powerful enough to strike a chord in both himself and the player. He is looking to create a deep emotional resonance, which gives the player a chance to ‘get’ the game through a unique personal experience. Sometimes players write about his games and capture what he was thinking, as he put it, ‘better than I could myself’, or find meanings deeper than he intended.

As far as gameplay goes, The Artist is Present is fairly easy to navigate. The graphics are fully pixelated yet capture the ambience of the Museum. The first screen places the player in front of its doors, and you are only allowed in if you are playing during the real exhibition’s opening hours in New York. Until then, there is no option but to wait until around 4:30 pm our time (GMT+1). The frustration keeps growing, since even after entering you still have to wait in a long queue of strangers before experiencing the performance. This mirrors the real-world participants who had to queue to experience The Artist is Present. If they were lucky, they sat in front of the artist and gazed at her for as long as they wanted.
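The entry rule is, at heart, a time-zone check: the player’s clock is converted to New York time and compared with the museum’s hours. The Python sketch below is not taken from Barr’s game; it assumes opening hours of 10:30 to 17:30 New York time every day, which is consistent with the 4:30 pm GMT+1 opening mentioned above but ignores any late-opening days.

```python
from datetime import datetime, time
from zoneinfo import ZoneInfo

# Assumption: the museum is open 10:30-17:30 New York time, every day.
NEW_YORK = ZoneInfo("America/New_York")
OPENING = time(10, 30)
CLOSING = time(17, 30)

def museum_is_open(now: datetime) -> bool:
    """Return True if the timezone-aware moment `now` falls inside the
    assumed opening hours, judged on the New York wall clock."""
    local = now.astimezone(NEW_YORK)
    return OPENING <= local.time() < CLOSING
```

Working in named time zones rather than a fixed offset lets daylight-saving changes on both sides of the Atlantic be handled by the time-zone database instead of by hand.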

Interestingly, Marina Abramović also played the game. She told Barr how she was kicked out of the queue when she went for a quick lunch in the real world while queuing in the digital one. Very unlucky; the trick is to keep the game tab open. Other than that, good luck!

Despite that little hiccup, Abramović did not give up on the idea of digitalising the experience of her art. After The Artist is Present, Barr and Abramović set out on a new quest: making the Digital Marina Abramović Institute. Released last October, it has proven a great challenge for those who cannot help switching windows to check their Facebook notifications. Not only are the instructions in a scrolling marquee, but you have to keep pressing the Shift key to prove you are awake and aware of what is happening in the game. It is the same kind of awareness expected of visitors to the real-life Institute.
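A ‘prove you are awake’ rule like this can be modelled as a simple watchdog timer. The Python sketch below is hypothetical: the five-second timeout and the rule that a player who misses it is expelled for good are assumptions for illustration, not details of the Digital Marina Abramović Institute.

```python
import time

class AttentionCheck:
    """Hypothetical watchdog: the player is expelled if no Shift press
    arrives within `timeout` seconds (timeout value is an assumption)."""

    def __init__(self, timeout: float = 5.0, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock          # injectable so the logic can be tested
        self.last_press = clock()
        self.expelled = False

    def on_shift_pressed(self) -> None:
        """Reset the timer, unless the player has already been expelled."""
        if not self.expelled:
            self.last_press = self.clock()

    def update(self) -> bool:
        """Call once per frame; returns True while the player may stay."""
        if self.clock() - self.last_press > self.timeout:
            self.expelled = True
        return not self.expelled
```

Passing the clock in as a parameter keeps the rule independent of real time, so the same class can be driven by a game loop or by a test.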

The quirkiness of Barr’s games reflects their creator. Besides The Artist is Present, in Let’s Play: Ancient Greek Punishment he adapted the Greek myth of Sisyphus to experiment with the frustration of never being rewarded. In Mumble Indie Bungle, he toyed with the culture of indie game bundles by creating ‘terrible’ versions of renowned indie games with ‘misheard titles’ (and so, ‘misheard’ game concepts). One of his 2013 projects is an iPhone game called Snek, an adaptation of the good old Snake on the Nokia 3310. In his version, Barr turned the device’s smooth, ‘naturally’ perfect touch interface on its head by relying on the gyroscope instead. Interaction with the Apple device becomes thoroughly awkward, as the player has to move around very unnaturally to meet the game’s demands.

This dedicated passion for challenging boundaries ultimately drives creators and artists alike to step out of their comfort zone and make things that challenge the way society thinks and what it values. Game making is no exception, especially for independent developers. An artist yearns for the satisfaction that comes with following a creative impulse and succeeding. In Barr’s case, that means being ‘part of the movement to expand game boundaries and show players (and ourselves) that the possibilities for what might be “allowed” in games is extremely broad.’

Accomplishing so much, against the culture industry’s odds, is a great triumph for most indie developers. For Pippin Barr, the real moment of success is when the game is finished and is being played. Then he knows that someone sat with the game and actually had an experience — maybe even ‘got it’.

Follow Pippin Barr on Twitter (@pippinbarr) or visit www.pippinbarr.com

Giuliana Barbaro-Sant is part of the Department of English Master of Arts programme.

An Intelligent Pill

Doctors regularly need to use endoscopes to take a peek inside patients and see what is wrong. Their current tools are pretty uncomfortable. Biomedical engineer Ing. Carl Azzopardi writes about a new technology that would involve just swallowing a capsule.

Michael* lay anxiously in his bed, looking up at his hospital room ceiling. ‘Any minute now,’ he thought, as he nervously waited for his parents and doctor to return. Michael had been suffering from abdominal pain and cramps for quite some time. The doctors could not figure out the cause through simple examinations, and he could not take it any more. His parents had taken him to a gut specialist, a gastroenterologist, who, after asking a few questions, had simply suggested an ‘endoscopy’ to find out what was wrong. Being new to this, Michael had immediately gone home to look it up. The search results did not thrill him.

The word ‘endoscope’ derives from the Greek words ‘endo’, inside, and ‘scope’, to view. Simply put, endoscopy means looking inside the body using instruments called endoscopes. In 1804, Philipp Bozzini created the first such device. His Lichtleiter, or light conductor, used hollow tubes to reflect light from a candle (or sunlight) into bodily openings. It was rudimentary.

Modern endoscopes are light years ahead. Made of sleek, black polyurethane elastomers, they consist of a flexible ‘tube’ with a camera at the tip. The tube is flexible so that it can wind through our internal piping, optical fibres shine light inside the body, and a hollow channel lets forceps or other instruments work during the procedure. Two of the more common types of flexible endoscope used nowadays are gastroscopes and colonoscopes, which examine the stomach and colon respectively. As expected, they are inserted through the mouth or rectum.

Michael was not comforted by such advancements. He was not enticed by the idea of having a flexible tube passed through his mouth or colon. The door suddenly opened. Michael jerked his head towards the entrance to see his smiling parents enter. Accompanying them was his doctor holding a small capsule. As he handed it over to Michael, he explained what he was about to give him.

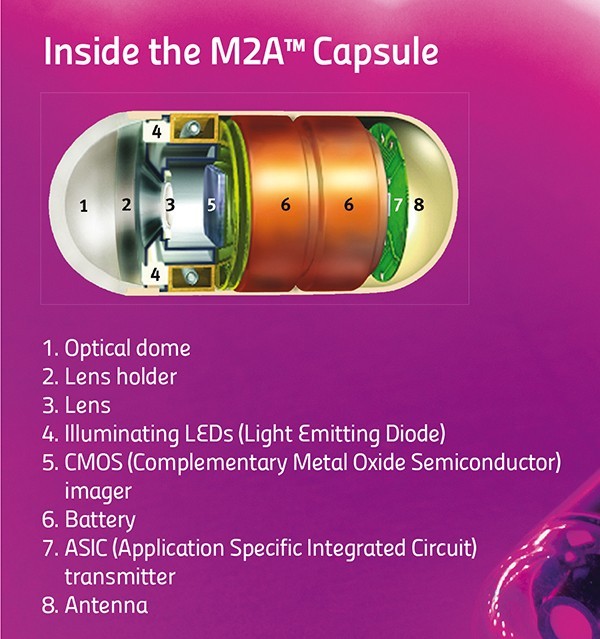

Enter capsule endoscopy. Invented in 2000 by an Israeli company, the procedure is simple. The patient just needs to swallow a small capsule. That is it. The patient can go home; the capsule does all the work automatically.

The capsule is equipped with a miniature camera, a battery, and some LEDs. Once swallowed, it travels through the patient’s gut, snapping around four to thirty-five images every second and transmitting them wirelessly to a receiver strapped around the patient’s waist. Eventually the patient passes the capsule, and on his or her next hospital visit the doctor downloads all the images saved on the receiver.

The capsule sounds like simplicity itself. No black tubes going down patients’ internal organs, no anxiety. Unfortunately, the capsule is not perfect.

“The patient just needs to swallow a small capsule. That is it. The patient can go home, the capsule does all the work automatically”

First of all, capsule endoscopy cannot replace flexible endoscopes. The doctors can only use the capsules to diagnose a patient. They can see the pictures and figure out what is wrong, but the capsule has no forceps that allow samples to be taken for analysis in a lab. Flexible endoscopes can also have cauterising probes passed through their hollow channels, which can use heat to burn off dangerous growths. The capsule has no such means. The above features make gastroscopies and colonoscopies the ‘gold standard’ for examining the gut. One glaring limitation remains: flexible endoscopes cannot reach the small intestine, which lies squarely in the middle between the stomach and colon. Capsule endoscopy can examine this part of the digestive tract.

A second issue with capsules is that they cannot be driven around. Capsules have no motors. They tend to go along for the ride with your own bodily movements. The capsule could be pointing in the wrong direction and miss a cancerous growth. So, the next generation of capsules are equipped with two cameras. This minimises the problem but does not solve it completely.

The physical size of the pill makes these limitations hard to overcome: engineers are finding it tricky to fit in mechanisms for sampling, treatment, or motion control. On the other hand, solutions to a third problem do exist. This one is a matter of too much information. The capsule captures around 432,000 images over the 8 hours it snaps away, and the doctor then needs to go through nearly all of them to spot the problematic few. It is a daunting task that takes up a lot of time, increases costs, and makes it easier to miss signs of disease.
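A quick check of the numbers: 432,000 images over 8 hours works out to an average of 15 images per second, inside the four-to-thirty-five range quoted earlier. The review speed of 25 images per second used below is purely hypothetical, chosen only to show the scale of the doctor’s task.

```python
# Figures from the text: about 432,000 images over roughly 8 hours.
IMAGES = 432_000
HOURS = 8

seconds = HOURS * 60 * 60          # 28,800 seconds of recording
average_fps = IMAGES / seconds     # 15.0 images per second on average

# Hypothetical review speed, for illustration only.
REVIEW_FPS = 25
review_hours = IMAGES / REVIEW_FPS / 3600   # hours to see every image once
```

Even at that brisk, assumed pace, looking at every image once would take almost five hours.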

A smart solution lies in looking at image content. Not all images are useful. A large majority are snapshots of the stomach uselessly churning away, or else of the colon, far down from the site of interest. Doctors usually use capsule endoscopy to check out the small intestine. Medical imaging techniques come in handy at this point to distinguish between the different organs. Over the last year, the Centre for Biomedical Cybernetics (University of Malta) has carried out collaborative research with Cardiff University and Saint James Hospital to develop software which gives doctors just what they need.

Discussions between these clinicians and engineers quickly showed that images of the stomach and large intestine were mostly of little use in capsule endoscopy.

Identifying the boundaries of the small intestine and extracting just these images would simplify and speed up screening. The doctor would look only at these images and discard the rest.

Engineers Carl Azzopardi, Kenneth Camilleri, and Yulia Hicks developed a computer algorithm that could, first and foremost, tell the digestive organs apart. An algorithm is a set of step-by-step instructions, usually written as code, that performs a specific task, like calculating employees’ paychecks. In this case, the custom program uses image-processing techniques to examine certain features of each image, such as colour and texture, and then uses these to determine which organ the capsule is in.

Take colour, for instance. The stomach has a largely pinkish hue, the small intestine leans towards yellowish tones, while the colon (perhaps unsurprisingly) turns a murky green. Such differences can be used to tell the organs apart. Additionally, to sort quickly through thousands of images, each image needs to be compacted. A specific histogram is used to amplify differences in colour and compress the information. These steps make the image processing quicker and easier.
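The article does not say which histogram the team used, so the Python sketch below swaps in a simple stand-in: a normalised hue histogram. It compresses a whole image, given here as a list of (r, g, b) pixels in the 0 to 255 range, into a handful of numbers describing its colour mix. The choice of hue and of eight bins is an assumption.

```python
import colorsys

def hue_histogram(pixels, bins=8):
    """Normalised hue histogram of an image given as (r, g, b) tuples, 0-255.

    Illustrative stand-in for the unspecified histogram in the text: the
    image is reduced to `bins` numbers that summarise its colours, so many
    frames can be compared quickly.
    """
    counts = [0] * bins
    for r, g, b in pixels:
        h, _, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # h in [0, 1)
        counts[min(int(h * bins), bins - 1)] += 1
    total = len(pixels)
    return [c / total for c in counts]
```

With eight bins, yellowish pixels (hue around 1/6) land in bin 1 and greenish ones (hue around 1/3) in bin 2, so ‘small intestine’ and ‘colon’ frames produce visibly different histograms.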

Texture is another distinctive quality of each organ. The small intestine is covered with small finger-like projections called villi, which increase the organ’s surface area and improve nutrient absorption into the bloodstream. These villi give the images a particular ‘velvet-like’ texture, which can be singled out using a technique called Local Binary Patterns. It works by comparing each pixel’s intensity with that of its neighbours, to determine whether they are larger or smaller than its own. From these comparisons a single number is worked out for each pixel, which gauges whether an edge is present or not (see image).
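The comparison step can be sketched in a few lines of Python. This is the basic 8-neighbour form of Local Binary Patterns on a greyscale image stored as a list of rows; the team’s exact variant is not given in the text. Each neighbour at least as bright as the centre contributes a 1 bit, and the histogram of the resulting codes over the image serves as the texture feature.

```python
def lbp_code(img, y, x):
    """8-neighbour Local Binary Pattern code (0-255) for interior pixel (y, x)."""
    centre = img[y][x]
    # Neighbours visited clockwise, starting from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dy, dx) in enumerate(offsets):
        if img[y + dy][x + dx] >= centre:
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """Normalised 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    total = sum(hist)
    return [h / total for h in hist]
```

A flat patch gives code 255 (every neighbour is at least as bright), while a vertical edge with a bright left side sets only the three left-hand bits, giving 193.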

Classification is the last and most important step in the whole process. At this point the software needs to decide whether an image shows the stomach, small intestine, or large intestine. To identify images automatically, the program is first trained to link the features described above with the different organs by being shown a small subset of labelled images, known as the training set. Once trained, the software can classify new images from different patients on its own. The engineers tested the software first using colour or texture alone, then using both features together. Factoring in both gave the best results.
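The article does not name the classifier the team used. As a swapped-in illustration of ‘training then classifying’, the Python sketch below uses one of the simplest methods, a nearest-centroid classifier: training averages the feature vectors of each organ, and a new image is assigned to the organ with the closest average. The feature vector could be, for example, a colour histogram and a texture histogram joined together; that combination is an assumption here.

```python
import math

def train_centroids(features, labels):
    """Average the feature vectors of each organ in the training set."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

def classify(vec, centroids):
    """Assign the organ whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda lab: math.dist(vec, centroids[lab]))
```

More capable classifiers follow the same pattern: fit on a labelled training set, then label unseen images from other patients.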

“The software is still at the research stage. That research needs to be turned into a software package for a hospital’s day-to-day examinations”

After the images have been labelled, the algorithm can draw the boundaries between the digestive organs. With the boundaries in place, the specialist can focus on the small intestine. At the press of a button, countless hours and much money are saved.
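One simple way to turn per-image labels into organ boundaries is sketched below in Python. It is an assumption, not the team’s published method. Because the capsule moves in one direction through the gut, isolated misclassified frames can be cleaned up with a sliding majority vote, after which the first and last ‘small intestine’ frames mark the boundaries. Ties in the vote go to the label seen first in the window.

```python
from collections import Counter

def smooth_labels(labels, window=5):
    """Sliding-window majority vote that removes isolated misclassified
    frames, such as one 'colon' frame inside the small intestine."""
    half = window // 2
    smoothed = []
    for i in range(len(labels)):
        neighbourhood = labels[max(0, i - half): i + half + 1]
        smoothed.append(Counter(neighbourhood).most_common(1)[0][0])
    return smoothed

def small_intestine_span(labels, target="small intestine"):
    """Return (first, last) frame indices labelled `target`, or None."""
    hits = [i for i, lab in enumerate(labels) if lab == target]
    return (hits[0], hits[-1]) if hits else None
```

Without smoothing, a single stray frame deep in the colon would stretch the small-intestine span and send the doctor through images they do not need.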

The software is still at the research stage, and that research eventually needs to be turned into a software package for a hospital’s day-to-day examinations. In the future, the algorithm could even be built directly into the capsule. The result would be an intelligent capsule whose recording adapts to the needs of the doctor, showing them just what they want to see.

Ideally the doctor would have it even easier with the software highlighting diseased areas automatically. The researchers at the University of Malta want to start automatically detecting abnormal conditions and pathologies within the digestive tract. For the specialist, it cannot get better than this.

The result? A shorter and more efficient screening process that could turn capsule endoscopy into an easily accessible and routine examination. Shorter specialist screening times would bring down costs in the private sector and lessen the burden on public health systems. Michael would not need to worry any longer; he’d just pop a pill.

* Michael is a fictitious character

The author thanks Prof. Thomas Attard and Joe Garzia. The research work is funded by the Strategic Educational Pathways Scholarship (Malta). The scholarship is part-financed by the European Union — European Social Fund (ESF) under Operational Programme II — Cohesion Policy 2007–2013, ‘Empowering People for More Jobs and a Better Quality of Life’.

Time to buy a smartwatch?

Just a few years back, mobile phones could make and receive calls and store a few numbers, and that was all they could do. Over the last few years, phones have grown ‘smarter’: they can surf the web, take photos, keep up to date with Facebook and Twitter, play games and music, let us read books, and much more.

Many argue that our watches are next in line for such a transformation, and given the excitement surrounding Samsung’s recently announced smartwatch, the Galaxy Gear, few will disagree. Samsung is not the only player chasing the potentially big returns of smartwatches. Another technology heavyweight, Sony, has been on board for a few years and has just announced its SmartWatch 2.

Many small start-ups have also joined the fray, delivering watches such as the Pebble, the Martian Passport, the Kreyos Meteor, the Wimm One, the Strata Stealth and the rather unimaginatively named I’m watch.

All these smartwatches provide basic features such as instant notifications of incoming calls, SMSes, Facebook updates, and tweets through a Bluetooth connection with a paired phone. Many also let users read email and control music playback.

With so many players and no clear winner, the technology still needs to mature. Sony and Samsung use colour LED-based displays, whose drawbacks are poor visibility in direct sunlight and a weak, one-day battery life. Others use electronic ink, the same screen technology as e-readers, with excellent visibility and much longer battery life, though sadly in black and white or limited colour.

User interaction also varies. While the Pebble and the Meteor favour a button-based interface, all other players utilise touch and voice control.

The differences do not stop there. Not all watches are waterproof – and do you really want to be taking off your watch every time you wash your hands? Also, some watches, like the I’m watch, provide a platform for app development, with new apps available for download every day.

One big player is still missing. Rumours of Apple’s imminent entry into the smartwatch business have been circling for a couple of years.

While guessing the name of Apple’s watch is easy (the iWatch), the technology has been kept under wraps. As with other Apple products, its watch will not be first to market. Is Apple again waiting for the technology to mature enough to release another game changer like the iPod, the iPhone, and, more recently, the iPad? Only time will tell.

My biggest problem with any smartwatch available is that none seem truly ‘smart’. Smartwatches seem like little dumb accessories to their smart big brothers — the phones. I am waiting for a watch to become smart enough to replace my phone before jumping on the smartwatch bandwagon.

Valletta’s Digital Layer

Dérive Valletta is an initiative by digital art student Matthew Mamo (supervised by Dr Vince Briffa) aimed at increasing the visibility of our capital city’s museums and cultural institutions using augmented reality.

Augmented reality offers many ways for people to interact with art and, through this art, with the city itself. Inspired by the work of Israeli artist Yaacov Agam, the digital visuals featured in Dérive Valletta require users to move around the objects being scanned in order to view the content.

With its own cohesive brand and identity, this initiative is ultimately intended to contribute towards the creation of a digital cultural infrastructure within Valletta before 2018. Because it is a digital layer laid over the real world, it will have no negative impact on the unique built environment of this UNESCO World Heritage Site.

The brand’s aesthetics were kept minimalistic to create an identity that can be incorporated into Valletta in an unobtrusive manner while endowing the initiative with a contemporary image. Minimalism is reflected in the restrained colour scheme and use of clean sans-serif typefaces.

The research was undertaken as part fulfilment of an MFA in Digital Arts and partially funded by the Strategic Educational Pathways Scholarship (Malta). This Scholarship is part-financed by the European Union — European Social Fund (ESF) under Operational Programme II — Cohesion Policy 2007–2013, “Empowering People for More Jobs and a Better Quality Of Life”.

Power of the Wind

My passion for renewable energies was sparked during my undergraduate studies in Mechanical Engineering at the University of Malta. Thanks to ERASMUS, I studied at the University of Strathclyde, which offered a Renewable Energy course that, at the time, was not available in Malta.

I spent the last year of my bachelor studies designing and testing part of a wind tunnel to simulate atmospheric wind conditions. This test setup allowed for more realistic wind turbine experiments than previous efforts.

Although I wanted to further my career in wind energy, I opted first to broaden my knowledge in the field of renewables by enrolling for the Masters in Sustainable Energy Technology at Delft University of Technology in the Netherlands in August 2010.

During the first year, I worked on several projects. They included designing a smart grid, which was presented at the European Joint Research Centre (JRC). I also helped develop an innovative thermal energy plant that exploits the temperature difference between the ocean surface and deep water (more than 1 km down) in tropical seas to generate electricity.
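The temperature difference exploited by such a plant is small, and that caps how efficiently heat can be turned into electricity. The theoretical ceiling for any heat engine is the Carnot efficiency, 1 − T_cold / T_hot with temperatures in kelvin. The Python sketch below assumes typical tropical values of about 26 °C at the surface and 5 °C in deep water; these are illustrative figures, not data from the project described here.

```python
def carnot_efficiency(t_hot_c: float, t_cold_c: float) -> float:
    """Upper bound on heat-engine efficiency between two reservoirs.
    Temperatures are given in degrees Celsius and converted to kelvin."""
    t_hot = t_hot_c + 273.15
    t_cold = t_cold_c + 273.15
    return 1 - t_cold / t_hot

# Assumed, typical tropical values: ~26 C at the surface, ~5 C at depth.
eta = carnot_efficiency(26, 5)   # about 0.07, i.e. at most roughly 7 %
```

Real plants achieve only a fraction of this limit, which is why such systems move very large volumes of water to produce useful power.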

During the second year, I returned to wind energy research. At DUWIND, Delft University’s renowned wind energy research institute, I looked into the effect wind turbines can have on each other. As wind turbine blades cut through the wind, they slow it down and change its direction. This can reduce the efficiency of nearby turbines, making them produce less energy. My results showed that a turbine’s effect on nearby machines diminishes when the wind distortion it causes is limited, either by the wind’s inherent instability or by other properties such as its proximity to the ground. By exploiting these qualities of the wind, a wind farm’s efficiency can be improved by up to 15%.
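How much a turbine’s wake costs its neighbours can be estimated with the Jensen (or Park) wake model, a common textbook approximation. It appears here only to illustrate the idea and is not the method used in the research above. In this simplified form, the wind-speed deficit behind a turbine shrinks as the wake spreads with distance, at a rate set by a wake decay constant k. Larger values of k are usually taken for more turbulent air, which gives one way of seeing why atmospheric conditions matter. All parameter values in the example are assumptions.

```python
import math

def jensen_deficit(ct, k, rotor_radius, distance):
    """Fractional wind-speed deficit behind a turbine (simplified Jensen model).

    ct: thrust coefficient of the upstream turbine
    k: wake decay constant (larger in more turbulent air)
    """
    return (1 - math.sqrt(1 - ct)) / (1 + k * distance / rotor_radius) ** 2

def downstream_power_ratio(ct, k, rotor_radius, distance):
    """Power of a fully waked downstream turbine relative to an unwaked one,
    using the fact that wind power scales with wind speed cubed."""
    return (1 - jensen_deficit(ct, k, rotor_radius, distance)) ** 3

# Assumed example: 40 m rotor radius, turbines 280 m (7 diameters) apart.
calm = downstream_power_ratio(0.8, 0.04, 40, 280)
turbulent = downstream_power_ratio(0.8, 0.075, 40, 280)
```

Under these assumptions the waked turbine keeps roughly 29% of its free-stream power in calmer air but about 44% when the wake mixes out faster, illustrating the cube-law sensitivity of power to wind speed.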

After my Masters I worked for a year in Eindhoven as a flow and thermal analyst at Segula Technologies Consultancy. I developed new components for a company’s cutting-edge lithography machines and worked on fuel cell system development for BOSAL engineering. I have now secured a Ph.D. scholarship in wind turbine blade aerodynamics, continuing the work I started during my Masters at DUWIND. This time I am looking into the influence of small flow control devices on the performance of large (10 MW) wind turbines.

Baldacchino was awarded a STEPS scholarship for his Masters studies, which is part-financed by the EU’s European Social Fund under Operational Programme II — Cohesion Policy 2007–2013.

Transport 2025

Emmanuel Francalanza imagines how Malta’s transport system might look in 2025. Illustrations by Sonya Hallett

Will Love Tear Us Apart

Have there been any studies to modify the domestic refrigerator into part fridge and part air conditioning unit?

Asked by Tony Bugeja

Immersive 3D Experience

Comfortably sitting in seat 3F, John is watching one of his favourite operas. From this close he can see every detail of the set and costumes, and the movements of the music director as he skilfully conducts the orchestra with careful gestures of his baton. He is immersed in the scene, capturing every detail. Then, all of a sudden, the doorbell rings. Annoyed, John has to stop the video to see who it is. This could be the mainstream TV experience of the future.

The technology in this scene is called free-viewpoint television, and it is part of my research at the University of Malta (UoM). Free-viewpoint television lets viewers select the viewpoint from which to watch a scene on a 3D television, changing it whenever they want to wherever they want to be. By moving a slider or making a hand gesture, the user can change perspective. This experience is currently found in video games, whose content is synthetically generated by the game’s graphics engine.

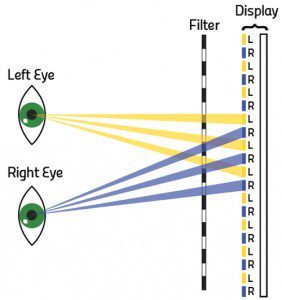

“For free-viewpoint to work, a scene needs to be captured using many cameras”

Today we are used to seeing a single viewpoint, and if there are multiple perspectives we usually have no control over them. Free-viewpoint technology will turn this idea on its head. It is expected to reach the market in the near future, with some companies and universities already experimenting with content and displays. New auto-stereoscopic displays (pictured) do not need glasses: they ‘automatically’ generate a 3D image depending on the angle from which you view them. A clear example was Japan’s promise to deliver 3D free-viewpoint coverage of all football matches as part of its bid to host the 2022 FIFA World Cup. The bid was unsuccessful, which might delay the technology by a few years.

Locally, my research (and that of my team) deals with the transmission side of the story (pictured). For free-viewpoint to work, a scene needs to be captured using many cameras. The more cameras there are, the more freedom the user has to select the desired view, but so many cameras create a lot of data. All the data captured by the cameras has to be transmitted to 3D devices in people’s homes, smartphones, laptops and so on. This transmission has to pass over a channel, and whether that is a fibre cable or a wireless link, it will always have limited capacity. Data transmission also costs money, and high costs would keep the technology out of our devices for decades.

My job is to make a large amount of data fit into smaller packages. To fit video into a channel we need to compress it. Today’s single-view video is also compressed, to save space on the channel so that more data can be transmitted at a lower price. For example, high-definition video uses 24 bits per pixel, and a 720p HD image contains 1280 by 720 pixels: that is nearly a million pixels (921,600) in every frame. Since video runs at around 24 to 30 frames per second, the amount of data to be transmitted every minute quickly escalates to unfeasible amounts.
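The arithmetic behind this is easy to check. The Python sketch below takes the 720p figures (1280 × 720 pixels, 24 bits per pixel) and assumes 25 frames per second, within the 24 to 30 range quoted. Uncompressed, a single view needs about 553 million bits every second, or roughly 4.1 gigabytes every minute. The small helper shows how the raw rate grows if every camera in a free-viewpoint setup sends its own stream.

```python
WIDTH, HEIGHT = 1280, 720     # 720p HD frame
BITS_PER_PIXEL = 24
FPS = 25                      # assumed frame rate, within the 24-30 range

pixels_per_frame = WIDTH * HEIGHT                          # 921,600 pixels
bits_per_second = pixels_per_frame * BITS_PER_PIXEL * FPS  # ~553 Mbit/s
gigabytes_per_minute = bits_per_second * 60 / 8 / 1e9      # ~4.1 GB/min

def raw_rate(cameras: int) -> int:
    """Uncompressed bit rate if every camera sends its own 720p stream."""
    return cameras * bits_per_second
```

These are raw figures before any compression, which is exactly the gap that compression research has to close.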

Free-viewpoint technology would be another big leap in size. Each camera would send its own video, each carrying as much data as the single-view video we receive today. With ten cameras, the channel would need to be ten times larger: the network operator would need ten times more space on the network to get the service to your house, making it ten times more expensive than single view and therefore unfeasible. Research is needed to drastically reduce the amount of data that has to be transmitted while keeping image quality high. These advances will make the technology feasible, cheaper, and available to all.

So the golden question is: how are we going to do that? Research, research, and more research. The video research community’s first attempts to solve this problem took its vast knowledge of single-view transmission and extended it to the new paradigm. Basic single-view algorithms (an algorithm is computer code that performs a specific function, like Google’s search engine) compress video by searching through the picture for similarities in space and in time. The algorithms then send only the change, or error vector, instead of the actual data. The error vector measures how imperfect a match is; how computer scientists use it to compress data is explained below.

First, let us look at the space component. When looking at a picture, it is quite clear that some areas are very similar. These similar areas can be linked and their data grouped around one reference point. The reference point is transmitted together with a mathematical representation (a vector) that tells the computer which areas are similar to each other. This reduces the amount of data that needs to be sent.
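The idea of sending a reference point plus small differences can be shown with the simplest possible spatial predictor. In the Python sketch below, which is a deliberate simplification and not the scheme used by real video standards, each pixel in a row is predicted from its left-hand neighbour. Only the first pixel and the prediction errors are sent; in smooth areas those errors are small numbers that compress well.

```python
def spatial_residuals(row):
    """Encoder side: keep the first pixel (the reference point) and replace
    every other pixel with its difference from the left-hand neighbour."""
    return [row[0]] + [row[i] - row[i - 1] for i in range(1, len(row))]

def reconstruct(residuals):
    """Decoder side: undo the prediction by accumulating the differences."""
    row = [residuals[0]]
    for r in residuals[1:]:
        row.append(row[-1] + r)
    return row
```

For a smooth row such as 200, 201, 201, 203, 202 the encoder sends 200, 1, 0, 2, -1, and the decoder rebuilds the original exactly.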

Secondly, let us analyse the time aspect. Video is a set of images shown one after another at 25 or 30 frames per second, giving the illusion of movement and action. For the video to flow seamlessly, consecutive images must be very similar: the second image closely resembles the first, with only small movements in some parts. As we did for space, a mathematical relationship can be calculated between similar areas from one image to the next. The first image is used as a reference point, and for the second we transmit only the vectors that describe which pixels have moved and by how much. This greatly reduces the data that needs to be transmitted.
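Finding ‘which pixels have moved and by how much’ is usually done by block matching. The Python sketch below shows the textbook full-search version with the sum of absolute differences (SAD) as the matching cost. Real encoders use much faster search strategies, and the block size and search range here are arbitrary choices. The returned motion vector points from the current block to its best match in the previous frame.

```python
def sad(cur, ref, by, bx, dy, dx, size):
    """Sum of absolute differences between the block of `cur` at (by, bx)
    and the block of `ref` displaced by (dy, dx)."""
    return sum(abs(cur[by + i][bx + j] - ref[by + dy + i][bx + dx + j])
               for i in range(size) for j in range(size))

def motion_vector(cur, ref, by, bx, size=2, search=2):
    """Full-search block matching: try every displacement within +/-search
    pixels and return the (dy, dx) whose reference block matches best."""
    h, w = len(ref), len(ref[0])
    best, best_cost = (0, 0), None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidate blocks that would fall outside the reference frame.
            if not (0 <= by + dy and by + dy + size <= h
                    and 0 <= bx + dx and bx + dx + size <= w):
                continue
            cost = sad(cur, ref, by, bx, dy, dx, size)
            if best_cost is None or cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best
```

If a bright square moves one pixel to the right between frames, the block in the new frame is matched one pixel to the left in the old one, so the encoder sends the vector (0, -1) plus any small leftover error instead of the pixels themselves.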

The above techniques are used in single-view transmission; with free-viewpoint technology we gain a new dimension. We also need to take into account the space between the cameras shooting the same scene. Since the scene is the same, there is a lot of similarity between the videos from each camera. The main differences are the angle and the fact that some objects might be visible from one camera and not from another. Keeping this in mind, a mathematical equation can be constructed that describes which parts of the scene are the same and which are new. A single camera’s video is used as a reference point, while its neighbouring cameras transmit only the ‘extra’ information. The other cameras can therefore compress their content drastically. In this way, the current standard can be extended to free-viewpoint TV.
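The same search idea carries over between cameras. Assuming the cameras sit side by side and are aligned (an assumption made here for simplicity), the same object appears shifted horizontally from one view to the next. The shift, called disparity, can be found with a one-dimensional search. The Python sketch below estimates it for a short segment of one image row; the segment length and maximum shift are arbitrary.

```python
def disparity(left_row, right_row, x, size=3, max_disp=4):
    """Horizontal shift d that best maps the segment of the right camera's
    row starting at x onto the left (reference) camera's row, measured by
    the sum of absolute differences."""
    best, best_cost = 0, None
    for d in range(0, max_disp + 1):
        if x + d + size > len(left_row):
            break                      # candidate would run off the row
        cost = sum(abs(right_row[x + i] - left_row[x + d + i])
                   for i in range(size))
        if best_cost is None or cost < best_cost:
            best, best_cost = d, cost
    return best
```

Where even the best shift leaves a large error, the content is probably visible from only one camera, and that is the ‘extra’ information the neighbouring camera still has to send.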

Compressing free-viewpoint transmissions is complex work, and this complexity is a drawback: mobile devices simply aren’t fast enough to run computationally intensive algorithms. Our research focuses on reducing the complexity of the algorithms. We modify them so that they run faster and need less computing power while keeping the same video quality, or losing very little.

“The road ahead is steep and a lot of work is needed to bring this technology to homes”

We have also explored new ways of reconstructing high-quality 3D views in minimum time using graphics processing units (GPUs), which are commonly used by high-end video games. Video must be reconstructed at a speed of at least 25 pictures per second, and this speed must be maintained if we want to build a smooth, continuous video in between two real camera positions (pictured). A single processor cannot run algorithms fast enough to achieve this feat; instead, parallel processing (many simultaneous computations) is essential. To take the strain off a computer’s main processor, processing can be offloaded to a GPU, but algorithms need to be built to use this alternative processing power. Ours show that we can obtain the speeds needed to process free-viewpoint 3D video even on mobile devices.
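At 25 pictures per second, each new view has a budget of just 40 ms. The Python sketch below only illustrates why this work suits parallel hardware: an intermediate view can be computed row by row (or pixel by pixel) with no row depending on another. The naive per-pixel blend of the two camera images is an assumption chosen for brevity; practical view synthesis uses depth information and avoids the ghosting a plain blend produces. The thread pool merely stands in for the thousands of threads a GPU would run, and in standard CPython it would not actually speed up this pure-Python arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor

FRAME_BUDGET_MS = 1000 / 25   # 40 ms per picture at 25 pictures per second

def blend_row(left_row, right_row, alpha):
    """Naive intermediate view for one row: weighted average of two cameras.
    alpha = 0 reproduces the left camera, alpha = 1 the right one."""
    return [(1 - alpha) * l + alpha * r for l, r in zip(left_row, right_row)]

def virtual_view(left, right, alpha, workers=4):
    """Rows are independent, so they can be processed in parallel; the
    thread pool here stands in for the many threads of a GPU."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda rows: blend_row(*rows, alpha),
                             zip(left, right)))
```

Because each output pixel depends only on the input pixels at the same position, the same structure maps directly onto a GPU kernel with one thread per pixel.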

Since free-viewpoint video takes up a large amount of network bandwidth, we researched whether networks can feasibly handle so much data. We considered next-generation mobile telephony networks (4G). They naturally offer more channel space, and we wanted to see how many users they can handle at different screen resolutions. We showed that the technology can be used, but only with a limited number of cameras. The number of users depends on the resolution used: a lower resolution needs less data, allowing more views or users. This research also came up with design solutions for the network’s architecture and the broadcasting techniques needed to minimise delays.
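The trade-off between resolution, number of views, and number of users comes down to a simple division. The Python sketch below uses entirely hypothetical numbers (a 100 Mbit/s cell and per-view bit rates of 2 and 8 Mbit/s for a lower and a higher resolution); they are not results from the study.

```python
def max_users(cell_capacity_mbps, views, mbps_per_view):
    """Users a cell can serve if each receives `views` compressed streams
    of `mbps_per_view` each. All figures are hypothetical."""
    return int(cell_capacity_mbps // (views * mbps_per_view))

low_res = max_users(100, views=3, mbps_per_view=2.0)    # 16 users
high_res = max_users(100, views=3, mbps_per_view=8.0)   # 4 users
```

Quadrupling the data per view cuts the number of users by roughly the same factor, and adding views has the same effect, which is why only a limited number of cameras proved practical.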

The road ahead is steep and a lot of work is needed to bring this technology to homes. My vision is that in the near future we will be consuming 3D content and free-viewpoint technology in a seamless and immersive way in our homes and mobile devices. So for now sit back and imagine what watching an opera or football match on TV would look like in a few years’ time.