by Alexander Hili

Gymnastic Polymers

Fillet is the best cut. Trust me. It’s worth the money.

Use molecular gastronomy to take advantage of decades of research into how meat changes with heat. Science indicates that the best cooking temperature is around 55˚C, and definitely not above 60˚C. At higher temperatures, myofibrillar proteins (which hold 80% of the meat’s water) and collagen (which holds the beef together) shrink. Shrinking squeezes out water, and in the water lies the flavour.

To cook the fillet, use a technique called sous vide. It involves vacuum-sealing the beef and keeping it at 55˚C in a water bath for 24–72 hours. This breaks down the proteins without overheating them. The beef becomes tender but retains its flavour and juiciness.

Take the beef out. It will look unpalatable. Quickly fry it on high heat on both sides to brown it. The high heat triggers the Maillard reaction, in which amino acids react with sugars to produce the browned crust and its flavour. Enjoy with a glass of your favourite red.

Roderick Micallef has a long family history within the construction industry. He coupled this passion with a fascination with science when reading for an undergraduate degree in Biology and Chemistry (University of Malta). To satisfy both loves, he studied the chemical makeup and physical characteristics of Malta’s Globigerina Limestone.

Micallef (supervised by Dr Daniel Vella and Prof. Alfred Vella) evaluated how fire or heat chemically changes limestone. Stone heated to between 150˚C and 450˚C turned red, because yellow iron (III) minerals such as goethite (FeOOH) had been dehydrated to red hematite (Fe2O3). Stone heated above 450˚C calcined, turning white. This colour change can help a forensic fire investigator quickly estimate the temperature a stone was exposed to in a fire, an essential clue to the fire’s nature.
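The colour thresholds above lend themselves to a simple lookup. The short Python sketch below is only an illustration: it assumes that unheated stone keeps its natural yellow colour (our assumption, not a result of the study), and it ignores the mixed or transitional colours that real fire-damaged stone may show.

```python
from typing import Optional, Tuple

# Colour-change thresholds (°C) reported in the study described above.
RED_ONSET_C = 150    # yellow goethite starts dehydrating to red hematite
WHITE_ONSET_C = 450  # stone calcines and turns white

def temperature_range(colour: str) -> Tuple[Optional[int], Optional[int]]:
    """Return the (lower, upper) temperature bounds in °C implied by a stone's colour.

    None marks an open bound. Treating 'yellow' as unheated stone is an assumption.
    """
    colour = colour.strip().lower()
    if colour == "yellow":
        return (None, RED_ONSET_C)
    if colour == "red":
        return (RED_ONSET_C, WHITE_ONSET_C)
    if colour == "white":
        return (WHITE_ONSET_C, None)
    raise ValueError(f"unrecognised colour: {colour!r}")

print(temperature_range("Red"))  # (150, 450)
```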

While conducting this research, Micallef came across an Italian study that had concluded that different strains of heterotrophic bacteria can consolidate concrete and stone. Locally, Dr Gabrielle Zammit had shown that this process was happening on ancient limestone surfaces (Zammit et al., 2011). These bacteria have the potential to act as bio-consolidants, and Micallef wanted to study whether they could be used to reinforce the natural properties of local limestone and protect it against weathering.

Such a study is crucial at a time when humanity’s impact on the natural environment is becoming central to scientific research. The routine application of conventional chemical consolidants to stone poses an environmental threat: it releases soluble salt by-products, and it leaves shallow hard crusts that peel off because the stone particles are incompletely bound. Natural bio-consolidation could prove to be an efficient solution for local application, which is especially important since Globigerina Limestone is our only natural resource.

This research is part of a Master of Science in Cross-Disciplinary Science at the Faculty of Science of the University of Malta, supervised by microbiologist Dr Gabrielle Zammit and chemists Dr Daniel Vella and Prof. Emmanuel Sinagra. The research project is funded by the Master it! Scholarship scheme, which is part-funded by the EU’s European Social Fund under Operational Programme II – Cohesion Policy 2007–2013.

Research — that would be the simplest way to answer the question above. Really and truly this answer would only apply to a small niche of individuals throughout the world.

It is a big challenge to explain to people what you do with a science university degree. The questions “Int għal tabib?” (Are you aiming to become a doctor?) or “Issa x’issir, spiżjar?” (Will you become a pharmacist?) are usually the responses. The thing is, people have trouble understanding non-vocational careers — if you are not becoming a lawyer, an accountant, a doctor or a priest, the concept of your job prospects is quite difficult to grasp for the average Joe.

In truth, it is not really 100% Joe Public’s fault. Research is a tough concept to come to terms with; just ask a good portion of Ph.D. students. There seems to be a lack of clarity in people’s minds about what goes on behind the scenes. If you boil it down, everything we use in our daily lives, from mobile phones to hand warmers, is the spoils of research: a laborious process with the ultimate goal of increasing our knowledge and, consequently, the utility of our surroundings.

So, then, why exactly is it such an alien concept? I think the reason is that research is very slow and sometimes very abstract. Gone are the days when a simple experiment could lead to a novel, ground-breaking discovery; research nowadays delves into highly advanced topics, building on past knowledge to add a little bit more. I have complained about this to many of my colleagues on several occasions, and it is even harder when you are studying something like Chemistry or Physics, or worse, Maths and Statistics. People just do not get it!

Research is exciting. The challenge is how to infect others with this enthusiasm without coming off as someone without a hint of a social life (just ask my girlfriend). It is nice to see initiatives like the RIDT and Think magazine trying hard to get the message out there that research is a continuous process with often few short-term gains. It can be surprising when you realise how much is really going on at our University, despite its size and budget.

Researchers still need to do more to win over the general public. The first step is relaying the message in the simplest terms possible: people need to stop feeling threatened by big words and abstract concepts they cannot grasp. There also need to be more opportunities to interact with research, and Science in the City is the perfect example. Finally, I think MCST needs to start playing a larger role: it must work more closely with the University and take on a more coordinated role at a national level. Only then can we begin to explain what we researchers do.

Producing food products, pharmaceuticals, and fine chemicals leads to hazardous waste and poses environmental and health risks. For over 20 years, green chemists have been attempting to transform the chemical industries by designing inherently safer and cleaner processes.

In 1982, 4 years before I was born, the world fell in love with Spielberg’s E.T. the Extra-Terrestrial. Fifteen years later, the movie Contact, an adaptation of Carl Sagan’s novel, hit the big screen. Although at the time I was too young to appreciate its scientific, political, and religious themes, I was captivated and it fired my imagination. I questioned whether we are alone in this vast space. What would happen if E.T. did call? Are we even listening? If so, how? And is it all a waste of time and precious money? Instead of deflating me, these questions inspired me to start a journey that led to my collaboration with SETI, the Search for Extraterrestrial Intelligence, where I participated in ongoing efforts to find intelligent civilisations on other worlds.

The debate on whether we are alone started ages ago, going back at least to Thales in Ancient Greece. Only recently has advanced technology allowed us to try to open communication channels with any advanced extraterrestrial civilisations that might exist. If we do not try, we will never answer this question.

For the past fifty years, we have been scanning the skies with large radio telescopes, listening for signals that cannot be generated naturally. The main assumption is that any advanced civilisation will follow a technological path similar to ours; for example, it will stumble upon radio communication as one of its first wireless technologies.

SETI searches are usually in the radio band. Large telescopes continuously scan and monitor vast patches of the sky. Radio emissions from natural sources are generally broadband, spreading their energy over a wide range of frequencies, whilst virtually all human radio communication occupies a very narrow bandwidth. This makes it easy to distinguish between natural and artificial signals, so most SETI searches focus on narrow-band signals of extraterrestrial origin.

To find narrow-band signals, researchers analyse a telescope’s observing band, the frequency range it can detect. This range is split into millions or billions of narrow frequency channels, all of which are searched at the same time for sharp peaks. This requires a large amount of computational resources: supercomputing clusters, specialised hardware systems, or millions of desktop computers. The famous SETI@home screen-saver, which started as the millennium turned, harnessed spare computing power from desktops signed up to the programme.
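The channel-by-channel search can be illustrated with a toy Python sketch; it is not the code used by SETI or SETI@home. Here a plain discrete Fourier transform splits 256 complex voltage samples into 256 channels (real systems use fast Fourier transforms and millions of channels), and any channel whose power exceeds 20 times the median channel power is flagged. The threshold factor, the simulated tone, and the function names are illustrative choices.

```python
import cmath
import math
import random
import statistics

def channelise(samples):
    """Split complex voltage samples into len(samples) frequency channels using a
    plain discrete Fourier transform. O(N^2): real systems use FFTs."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]

def find_narrowband_peaks(samples, threshold_factor=20.0):
    """Return the channels whose power exceeds threshold_factor x the median power.

    For pure Gaussian noise each channel's power is exponentially distributed,
    so a noise channel passes the cut with probability 2**-threshold_factor.
    """
    power = [abs(c) ** 2 for c in channelise(samples)]
    noise_floor = statistics.median(power)
    return [k for k, p in enumerate(power) if p > threshold_factor * noise_floor]

# Usage: a steady tone in channel 37, as strong as the noise in each sample.
rng = random.Random(42)
N, TONE_CHANNEL = 256, 37
samples = [
    cmath.exp(2j * math.pi * TONE_CHANNEL * t / N)
    + complex(rng.gauss(0, math.sqrt(0.5)), rng.gauss(0, math.sqrt(0.5)))
    for t in range(N)
]
print(find_narrowband_peaks(samples))
```

Using the median as the noise floor keeps a single strong peak from raising its own detection threshold, and the Fourier transform piles the tone’s energy into one channel while the noise stays spread across all of them.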

E.T. civilisations might also transmit signals in powerful broadband pulses, so SETI could also search for broadband signals. However, these are more difficult to tease apart from natural emissions and require more thorough analysis. The problem is that as broadband signals, natural or otherwise, travel through interstellar space, they get dispersed: higher frequencies arrive at the telescope before lower ones, even though they were emitted at the same time. The amount of dispersion, the dispersion gradient, depends on how much ionised interstellar gas the signal passes through, and therefore on the distance between transmitter and receiver. The signal can only be searched after this effect is undone by a process called dedispersion. Since the distance to E.T. is unknown, thousands of gradients have to be tried, one for each possible distance. This process is nearly identical to that used to search for pulsars, which are very dense, rapidly rotating stars that emit highly energetic beams from their magnetic poles. To a telescope, pulsars look like lighthouses, with a regular pulse across the entire observation band.
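Incoherent dedispersion, the simplest way of doing this, can be sketched in Python. The sketch assumes the standard cold-plasma dispersion law used in pulsar astronomy, in which the extra delay at frequency f grows as DM/f²; the dispersion measure (DM) is the quantity behind the ‘dispersion gradient’ described above. The toy data, channel frequencies, and trial DMs are invented for illustration, and a real-time system handles vastly more data far more efficiently.

```python
# Dispersion constant: delay (s) = K_DM * DM * f^-2, f in MHz, DM in pc cm^-3.
K_DM = 4.148808e3

def delay_s(dm, freq_mhz, ref_mhz):
    """Extra arrival delay at freq_mhz relative to the reference (highest) frequency."""
    return K_DM * dm * (freq_mhz ** -2 - ref_mhz ** -2)

def dedisperse(data, freqs_mhz, dt_s, dm):
    """Undo the delay in every channel for one trial DM and sum the channels.

    data[c][t] is the power in channel c at time sample t.
    """
    ref = max(freqs_mhz)
    n = len(data[0])
    series = [0.0] * n
    for channel, f in zip(data, freqs_mhz):
        shift = round(delay_s(dm, f, ref) / dt_s)  # delay in whole samples
        for t in range(n - shift):
            series[t] += channel[t + shift]
    return series

def search(data, freqs_mhz, dt_s, trial_dms):
    """Brute force: try every trial DM and keep the one giving the strongest pulse."""
    best_dm, best_peak = None, float("-inf")
    for dm in trial_dms:
        peak = max(dedisperse(data, freqs_mhz, dt_s, dm))
        if peak > best_peak:
            best_dm, best_peak = dm, peak
    return best_dm, best_peak

# Toy data: 16 channels from 1400 to 1550 MHz, 1 ms samples, one pulse with DM = 100.
freqs = [1400 + 10 * i for i in range(16)]
dt, n_samples, true_dm, t0 = 1e-3, 500, 100, 50
data = [[0.0] * n_samples for _ in freqs]
for channel, f in zip(data, freqs):
    channel[t0 + round(delay_s(true_dm, f, max(freqs)) / dt)] = 1.0

print(search(data, freqs, dt, range(0, 201, 10)))  # (100, 16.0)
```

Only at the right trial DM do all 16 channels line up in the same time sample; every other trial smears the pulse out, which is why the whole range of gradients has to be tested.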

For the past four years, I have been developing a specialised system that can perform all this processing in real time, meaning that any interesting signals are detected immediately. Researchers no longer need to wait for vast computers to process stored data, which reduces the disk space needed to store it all. Processing the data as it arrives also reduces the risk of losing non-periodic, short-duration signals. To tackle the large computational requirements, I used Graphics Processing Units (GPUs), typically unleashed on video game graphics, because a single device can do the work of at least 10 laptops. This system can be used to study pulsars, search for big explosions across the universe, search for gravitational waves, and stalk E.T.

Hunting for planets orbiting other stars, known as exoplanets, has recently become a major scientific endeavour. The Kepler space telescope has found over 3,500 planet candidates, of which about 961 are confirmed. Finding so many planets is leading scientists to believe that the galaxy is chock-full of them. The current estimate is 100 billion planets in our galaxy, at least one per star. For us E.T. stalkers, this is music to our ears.

Life could be considered inevitable. However, not all planets can harbour life, or at least life as we know it. Humans need liquid water and a protective atmosphere, amongst other things. Life-supporting planets need to be approximately Earth-sized and orbit within their parent star’s habitable zone. This Goldilocks zone is neither so far from the star that the planet freezes nor so close that it fries. Such exoplanets are targeted by SETI searches, which perform long-duration observations of Earth-like exoplanets.

By focusing on these planets, SETI is gambling: it misses huge portions of the sky to focus on areas that could turn out to be empty. SETI could instead perform wide-field surveys, which search large chunks of the sky for any interesting signals. Recent developments in radio telescope technology allow the entire sky to be observed at once, making 24/7 SETI monitoring systems possible. Wide-field surveys lack the resolution needed to pinpoint where a signal comes from, so follow-up observations are required. In any case, a one-off signal would never be convincing.

For radio SETI searches, the big question is: where do we look for E.T.? I would prefer rephrasing to: at which frequency do we listen for E.T.? Imagine being stuck in traffic and searching for a good radio station without having a specific one in mind. Now imagine having trillions of channels to choose from and only one having good reception. One would probably give up, or go insane. Narrowing down the range of frequencies at which to search is one of the biggest challenges for SETI researchers.

The Universe is full of background noise from naturally occurring phenomena, much like the hiss between radio stations. Searching for artificial signals is like looking for a drop of oil in the Pacific Ocean. Fortunately, there is a ‘window’ in the radio spectrum where the noise drops sharply. It lies between the hydrogen line at about 1.42 GHz and the hydroxyl lines near 1.66 GHz, and since H and OH together make water, it is affectionately called the ‘water hole’. SETI researchers search here, reasoning that E.T. would know about this quiet window and deliberately broadcast there. Obviously, this is just guesswork, and some searches use a much wider frequency range.

Two years ago, we decided to perform a SETI survey. Using the Green Bank Telescope in West Virginia (USA), the world’s largest fully steerable radio dish, we scanned the same area the Kepler telescope was observing in its search for exoplanets. This area was partitioned into about 90 chunks, each of which was observed for some time. Within it, we also targeted 86 star systems with Earth-sized planets. We then processed around 3,000 DVDs’ worth of data with a system we developed ourselves at the University of Malta, looking for signs of intelligent life, but we came out empty-handed.

Should we give up? Is it the right investment of energy and resources? These questions have plagued SETI from the start. So far there is no sign of E.T., but we have made some amazing discoveries while trying to find him.

Radio waves were discovered and entered mainstream use in the late 19th century, so we would be invisible to other civilisations unless they are within about 100 light years of us. Radio waves travel at the speed of light, just under 9.5 trillion kilometres per year. Signals from Earth have only travelled 100 light years, whereas our broadcasts would take 75,000 years to reach the other side of our galaxy. To compound the problem, technological advances might soon make most radio signals obsolete. Taking our own example, aliens would have a very small time window to detect earthlings. The same reasoning works the other way: E.T. might be using technologies that are too advanced for us to detect. As the author Arthur C. Clarke stated, ‘any sufficiently advanced technology is indistinguishable from magic’.
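The numbers in this paragraph are easy to check. The Python below uses the exact speed of light and a Julian year of 365.25 days (the convention behind the light year) to confirm the ‘just under 9.5 trillion kilometres’ figure, and compares how far our broadcasts have travelled with the roughly 75,000-light-year trip to the far side of the galaxy quoted above. The function names are ours, for illustration.

```python
C_KM_PER_S = 299_792.458                  # speed of light in a vacuum (exact by definition)
SECONDS_PER_JULIAN_YEAR = 365.25 * 86_400
KM_PER_LIGHT_YEAR = C_KM_PER_S * SECONDS_PER_JULIAN_YEAR

def distance_km(light_years):
    """Convert a distance in light years to kilometres."""
    return light_years * KM_PER_LIGHT_YEAR

def fraction_covered(years_broadcasting, target_ly):
    """Fraction of the way to a target target_ly light years away that our
    oldest signals have travelled (radio covers one light year per year)."""
    return min(years_broadcasting / target_ly, 1.0)

print(f"{KM_PER_LIGHT_YEAR:.3e} km per light year")             # 9.461e+12
print(f"{distance_km(100):.2e} km travelled in 100 years")       # 9.46e+14
print(f"{fraction_covered(100, 75_000):.2%} of the way across")  # 0.13%
```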

At the end of the day, it is all a probability game, and a tough one to play. Frank Drake and Carl Sagan both tried. They came up with a number of factors that influence the chance of two civilisations communicating. One is that we live in a very old universe, over 13 billion years old, and for two civilisations to communicate, their time windows need to overlap. Another is that any technological signature we try to detect might already be obsolete for advanced alien life. Add to these the assumed number of planets in the Universe and the probability of an intelligent species evolving. For each factor, several estimates have been calculated. New astrophysical, planetary, and biological discoveries keep shifting the numbers, and the results range from pessimistic to a universe teeming with life.
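Factors like these are usually combined in the Drake equation, formulated by Frank Drake in 1961: N = R* × fp × ne × fl × fi × fc × L, where N is the number of civilisations in our galaxy whose signals we could detect and L is how long such a civilisation keeps transmitting. The Python sketch below simply multiplies the factors. The two sets of inputs are hypothetical values, chosen only to show how far the answer swings between pessimistic and optimistic; they are not published estimates.

```python
from math import prod

def drake(r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years):
    """Number of detectable civilisations in the galaxy, N, from the Drake equation.

    r_star         -- rate of star formation in the galaxy (stars per year)
    f_p            -- fraction of stars with planets
    n_e            -- planets per planetary system able to support life
    f_l, f_i, f_c  -- fractions of those that go on to develop life,
                      intelligence, and detectable technology
    lifetime_years -- how long a civilisation keeps producing detectable signals
    """
    return prod((r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years))

# Hypothetical inputs, for illustration only.
pessimistic = drake(1, 0.5, 0.01, 0.01, 0.01, 0.1, 1_000)
optimistic = drake(3, 1.0, 0.2, 1.0, 0.5, 0.5, 10_000)
print(f"pessimistic: {pessimistic:.0e}, optimistic: {optimistic:.0f}")
```

The lifetime term L is where the overlapping time windows come in: a civilisation that only transmits for a century contributes almost nothing, however common life may be.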

The problem with a life-bloated galaxy is that we have not found any of that life. Aliens have not contacted us, despite what conspiracy theorists say. One fatalistic view is that intelligent life is destined to destroy itself, while a simpler explanation could be that we are just too damned far apart. The Universe is a massive place. Some human tribes were only discovered in the last century, and by SETI standards they have been living next door the whole time. The Earth is a grain of sand in the cosmic ocean, and we have not even fully explored it yet.

Still, the lack of alien chatter is troubling. Theorists have come up with countless ideas to explain the lack of evidence for intelligent alien existence. The only way to solve the problem is to keep searching with an open mind. Future radio telescopes, such as the Square Kilometre Array (SKA), will allow us to scan the entire sky continuously. They require advanced systems to tackle the data deluge. I am part of a team working on the SKA and I will do my best to make this array possible. We will be stalking E.T. using our most advanced cameras, and hopefully we will catch him on tape.

Exoplanets Galore

My phone rings, waking me up in the middle of the night. It is 2 a.m., and I (Dr Gianluca Valentino) am driving from the sleepy French village where I live, at the foot of the snow-capped Jura mountains, to the CERN Control Centre. Groggy as I feel, I am trembling with excitement at finally putting months of my work to the test.

The operators on the night shift greet me as I come in through the sliding door. These are the men and women who keep the €8 billion Large Hadron Collider (LHC) running smoothly. The LHC produces 600 million particle collisions per second, allowing physicists to examine the fundamentals of the universe. Their most recent discovery is the Higgs boson, a fundamental particle. Announced in 2012, the finding appears to confirm the Higgs field theory, which describes how other particles acquire mass. It helps explain the universe around us.

The empty champagne bottles on the shelves of the CERN Control Centre are a testimony to the work of thousands of physicists, engineers, and computer scientists. The LHC has broken record after record, rising to stratospheric fame.

The LHC is an engineering marvel: a huge circular tunnel, 27 km in circumference, around 100 m underground. It straddles the Franco-Swiss border and is a testament to 50 years of non-military research at CERN, the European Organization for Nuclear Research. CERN is also the birthplace of the World Wide Web.

The LHC works by colliding particles together, allowing physicists to peer into the inner workings of atoms. Two counter-rotating hadron (proton or heavy-ion) beams are accelerated to nearly the speed of light using a combination of magnetic and electric fields. A hadron is a particle smaller than an atom, made up of quarks, which are fundamental particles (there is nothing smaller than them, for now). Each proton circulates with an energy of 7 TeV, and the total energy stored in each beam is similar to that of a French TGV train travelling at 150 km per hour.
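The TGV comparison refers to the total energy stored in a beam rather than to a single particle. A quick Python check, assuming the LHC’s design figures of 2,808 bunches of 1.15 × 10¹¹ protons each at 7 TeV, and a TGV of about 400 tonnes (both are our assumptions; neither figure appears in the text), gives a few hundred megajoules for each. Dividing the beam energy by the conventional 4.184 MJ per kilogram of TNT lands close to the 80 kg of TNT quoted for the beam’s destructive power.

```python
EV_TO_J = 1.602176634e-19   # joules per electronvolt (exact SI value)
TNT_J_PER_KG = 4.184e6      # conventional energy equivalent of 1 kg of TNT

def beam_energy_j(bunches, protons_per_bunch, proton_energy_ev):
    """Total kinetic energy stored in one beam."""
    return bunches * protons_per_bunch * proton_energy_ev * EV_TO_J

def kinetic_energy_j(mass_kg, speed_km_h):
    """Classical kinetic energy, 1/2 m v^2, of a train."""
    v = speed_km_h / 3.6    # km/h -> m/s
    return 0.5 * mass_kg * v ** 2

# Assumed LHC design figures and an assumed 400-tonne TGV.
beam = beam_energy_j(2_808, 1.15e11, 7e12)
tgv = kinetic_energy_j(400_000, 150)
print(f"beam: {beam / 1e6:.0f} MJ, TGV: {tgv / 1e6:.0f} MJ, "
      f"TNT equivalent: {beam / TNT_J_PER_KG:.0f} kg")
# beam: 362 MJ, TGV: 347 MJ, TNT equivalent: 87 kg
```

Each proton carries only about a microjoule; it is the sheer number of protons that adds up to a speeding train.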

For the magnets to work at maximum strength, they need to operate in superconducting mode. This requires cooling the collider to -271°C using liquid helium, making it colder than outer space. It is also the hottest place in the universe: collisions between lead ions have reached temperatures of over 5 trillion °C. Not even supernovae pack this punch.

The two separate beams are brought together and collided at four points, where the physics detectors are located. Each collision generates new sub-atomic particles, and the detectors gather information about them, tracking their motion and measuring their energy and charge. ‘Gluon fusion’, in which two gluons (force-carrying particles, a type of boson) combine, is the most likely mechanism for producing Higgs bosons at the LHC.

My role in this huge experiment is to calibrate the LHC’s brakes. Consider a simple analogy. A bike’s brakes need to be positioned at the right distance from the spinning wheel, and are designed to halt a bike in its tracks from a speed of around 70 km/h. Too far from the wheel, and the bike won’t stop when the brakes are applied. Too close, and the bike won’t even move. In the LHC, the particles travel at nearly 300,000 km/s.

Devices called collimators act as the LHC’s brakes. The LHC is equipped with 86 of them, 43 per beam. They passively intercept particles that, travelling at nearly the speed of light, gradually drift outwards from the centre of the beam. If the collimators are placed too far from the beam, the machine is unprotected: the beam’s energy, equivalent to that of 80 kg of TNT, would eventually drill a hole needing months or years to repair. If the collimators are too close to the beam, they sweep up too many particles, reducing the beam’s particle population and its lifetime.

The LHC has four different types of collimators, which clean the beam in multiple stages over a few hundred metres. These collimators also protect the expensive physics detectors from damage if a beam were to hit them directly. If the detectors were hit, the LHC would grind to a halt.

What does it take to calibrate these brakes? Each collimator is made up of two metre-long blocks of carbon composite or tungsten, known as ‘jaws’. The jaws should be positioned symmetrically on either side of the beam, with gaps as small as 3 mm to let the beam through. They can be moved in 5 µm increments, 20 times smaller than the width of a typical human hair. The precision is necessary but makes the procedure very tedious.

The beam’s position and size at each collimator are initially unknown. They are determined through a process called beam-based alignment. During alignment, each jaw is moved in steps towards the beam until it just scrapes the beam’s edge. Beam loss monitors near the collimator register how many particles the jaw is mopping up. The beam position is then calculated as the average of the aligned jaw positions on either side, while the beam size is determined from the gap between the jaws.
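The arithmetic of beam-based alignment can be sketched in Python. This is a simplified illustration, not CERN’s software: the hypothetical `touches_beam` check stands in for the beam loss monitors, positions are whole micrometres, and the 2,000-step limit simply reflects 10 mm of travel at 5 µm per step. The beam in the usage example is invented.

```python
STEP_UM = 5          # jaw step size: 5 micrometres
MAX_STEPS = 2_000    # 10 mm of travel / 5 µm per step

def align_jaw(start_um, direction, touches_beam):
    """Step one jaw towards the beam (direction +1 or -1) until touches_beam(position)
    reports that it is scraping the beam edge. Returns the aligned position."""
    pos = start_um
    for _ in range(MAX_STEPS):
        if touches_beam(pos):
            return pos
        pos += direction * STEP_UM
    raise RuntimeError("jaw reached the end of its travel without touching the beam")

def centre_and_gap(left_um, right_um):
    """Beam centre = average of the two aligned jaws; the gap measures the beam's size."""
    return (left_um + right_um) / 2, right_um - left_um

# Hypothetical beam: centre at +120 µm, edges 1,500 µm either side of it.
left = align_jaw(-5_000, +1, lambda pos: pos >= 120 - 1_500)
right = align_jaw(5_000, -1, lambda pos: pos <= 120 + 1_500)
print(centre_and_gap(left, right))  # (120.0, 3000)
```

Note that the beam centre comes out offset from zero: this is exactly the unknown that alignment has to discover before the jaws can be placed symmetrically.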

The problem is that there are 86 collimators, and each one needs to be calibrated, making the process painfully slow. Aligning the jaws manually takes several days, totalling 30 hours of beam time. To top it all, the alignment has to be repeated at various stages of the machine cycle, as the beams shrink with increasing energy and imperfections in the magnetic fields can shift the beam’s path. This lost time costs millions and eats into the LHC’s running time.

Previously, an operator had to click in a software application for every jaw movement towards the beam. With a step size of 5 µm and a total potential distance of 10 mm, that is 2,000 clicks per collimator jaw! Extreme precision is required: if a jaw moves too far into the beam, the particle loss rate exceeds a certain threshold and the beam is automatically extracted from the LHC. A few hours are then lost until the operators get the machine back and restart the alignment procedure.

Over the course of my Ph.D., I automated the alignment, speeding it up by developing several algorithms — computer programs that carry out a specific task. The LHC now runs on a feedback loop that automatically moves the jaws into the correct place without scraping away too much beam. The feedback loop enables many collimators to be moved simultaneously, instead of one at a time. A pattern recognition algorithm determines whether the characteristic signal observed when a jaw touches the beam is present or not. This automates what was previously a manual, visual check performed by the operator.
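The structure of such a feedback loop can be illustrated with a toy Python version. Everything here is hypothetical: the jaw names, the simulated loss readings, and especially the rule-based `is_alignment_spike` check, which is a crude stand-in for the pattern recognition algorithm described above (it calls a reading a spike if it rises to at least five times the baseline and then decays to half its peak). What it does show is the idea: every unaligned jaw advances one step per iteration, and each stops as soon as its own spike appears.

```python
STEP_UM = 5
MAX_STEPS = 2_000

def is_alignment_spike(losses, baseline, spike_factor=5.0, decay_fraction=0.5):
    """Crude stand-in for pattern recognition: a sharp rise above the baseline
    that then decays, unlike steady high losses or random noise."""
    peak = max(losses)
    return peak >= spike_factor * baseline and losses[-1] <= decay_fraction * peak

def align_simultaneously(jaws, read_losses, baseline):
    """jaws maps a name to (start_um, direction); read_losses(name, pos) returns
    the loss readings recorded after that jaw moves to pos. Each iteration
    advances every jaw that has not yet aligned by one step."""
    positions = {name: start for name, (start, _) in jaws.items()}
    pending = set(jaws)
    for _ in range(MAX_STEPS):
        if not pending:
            break
        for name in sorted(pending):
            positions[name] += jaws[name][1] * STEP_UM
            if is_alignment_spike(read_losses(name, positions[name]), baseline):
                pending.discard(name)
    if pending:
        raise RuntimeError(f"not aligned: {sorted(pending)}")
    return positions

# Hypothetical beam edges (µm) seen by the two jaws of one collimator.
EDGES = {"left": -1_500, "right": 1_500}

def simulated_losses(name, pos):
    touched = pos >= EDGES[name] if name == "left" else pos <= EDGES[name]
    return [20.0, 8.0, 4.0] if touched else [1.0, 1.1, 0.9]

print(align_simultaneously({"left": (-3_000, +1), "right": (3_000, -1)},
                           simulated_losses, baseline=1.0))
```

Requiring the signal to decay, not just to rise, is what lets a rule like this tell a jaw touching the beam apart from a period of generally high losses.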

The sun’s rays begin to filter through the CERN Control Centre, and the Jura mountains are resplendent in their morning glory. The procedure is complete: all collimators are aligned in just under 4 hours, the fastest time ever achieved.

In early 2013, the LHC was shut down for a couple of years for important upgrades. Before then, my algorithms helped save hundreds of hours; since the LHC costs €150,000 per hour to run, millions of euros were also saved. This software was one piece of the puzzle in providing more time for the LHC’s physics programme, and it is here to stay.

The morning shift crew comes in. The change of guard is performed to keep the machine running 24 hours a day, 7 days a week, while I head home to catch up on lost sleep.

Over the summer, Dr Edward Duca visited the beautiful island of Gozo to meet Prof. Ray Ellul and his team, based in Xewkija and at the Giordan Lighthouse. Gozo is a tourist hotspot because of its landscapes, churches, and natural beauty. These same qualities drew Ellul there to obtain baseline readings of air pollutants, since human effects should be minimal. Their equipment told them a different story.

‘They’re very big, anything from 10,000 tonnes to 100,000 tonnes,’ atmospheric physicist Prof. Ray Ellul tells me, describing the 30,000 large ships his team observed passing between Malta and Sicily over a year. This shipping superhighway sees one third of the world’s shipping traffic pass by, carrying goods from Asia to Europe and back.

The problem could be massive. ‘A typical 50,000 tonner will have an engine equivalent to 85 MW,’ says Ellul. Malta’s two power stations churn out nearly 600 MW between them, so about seven such ships would match the Islands’ entire generating capacity.

Ellul continues: ‘This is far, far worse. We are right in the middle of it, and with winds from the northwest we get the benefit of everything.’ Northwest winds blow over Malta and Gozo 70% of the time, which means that for roughly 70% of the time the pollutants streaming out of these ships are carried over the Islands. Even in Gozo, where local traffic is lighter, air quality is being affected.

Malta is dependent on shipping. The Maltese flag has the largest registered tonnage of ships in Europe, and shipping brings millions into Malta. We cannot afford to divert 30,000 ships to another sea. Yet Malta is part of the EU, and our politicians could ‘go to Brussels with the data and say we need to ensure that shipping switches to cleaner fuels when passing through the Mediterranean.’ Politicians would also need to go to the Arab League to strike a deal with North Africa. Ships currently use heavy fuel oil with 3.5% sulfur; this needs to go down to at least 0.5%. The problem is that it doubles the cost. Malta’s battle at home and abroad won’t be easy, but these measures have already been taken in the Baltic Sea.
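Why does the sulfur limit matter so much? Burning fuel turns its sulfur into sulfur dioxide (S + O₂ → SO₂), and each SO₂ molecule weighs about twice as much as the sulfur atom it contains. The Python sketch below assumes, for simplicity, that all the fuel’s sulfur leaves the funnel as SO₂; in practice a small fraction forms sulfate particles instead.

```python
M_S = 32.06            # molar mass of sulfur, g/mol
M_O = 16.00            # molar mass of oxygen, g/mol
M_SO2 = M_S + 2 * M_O  # S + O2 -> SO2

def so2_kg_per_tonne(sulfur_percent):
    """SO2 (kg) emitted per tonne of fuel, assuming all fuel sulfur becomes SO2."""
    sulfur_kg = 1_000 * sulfur_percent / 100
    return sulfur_kg * M_SO2 / M_S

for s in (3.5, 0.5):
    print(f"{s}% sulfur -> about {so2_kg_per_tonne(s):.0f} kg of SO2 per tonne of fuel")
```

Roughly 70 kg of SO₂ per tonne of fuel drops to about 10 kg: a seven-fold cut, as long as the same fraction of sulfur is converted in both cases.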

The research station in Gozo is a full-fledged Global Atmosphere Watch station with a team of five behind it. It can now monitor a whole swathe of pollutants, but its beginnings were much more humble, built on the efforts of Ellul, who was drawn into studying the atmosphere in the 1980s when he shifted his career from chemistry to physics.

In the early 1990s, the late rector, Rev. Prof. Peter Serracino Inglott, wanted the University to start building up research projects. ‘At that time, we knew absolutely nothing about what was wrong with Maltese air and Mediterranean pollution,’ explained Ellul. Building a fully fledged monitoring station seemed to be the key, so Ellul sent ‘handwritten letters with postage stamps’ to the Max Planck Institute in Mainz. Nobel Prize winner Paul J. Crutzen wrote back inviting him to spend a year’s sabbatical in Germany, but the institute’s help didn’t stop there. ‘He helped us set up the first measuring station, [to analyse the pollutants] ozone, then sulphur dioxide, then carbon monoxide. That’s the system we had in 1996 — […] 2 or 3 instruments.’

They lived off German generosity until 2008, when Malta started tapping into EU money. After some ERDF money and an Italy-Malta project on Etna called VAMOS SEGURO (see Etna, THINK issue 06, pg. 40), Ellul now manages a team of five. In homage to his early German supporters, he has structured the research team around a Max Planck model, ‘one of the best systems in the World for science’.

Getting data about ships is not easy. ‘It is very sensitive information and there is a lot of secrecy behind it,’ explains Ing. Francelle Azzopardi, a Ph.D. student in Ellul’s team. It is also very expensive. Lloyd’s is the world’s ship registry that tracks all ships, knowing their size, location, engine type, and fuel used: basically a researcher’s dream. However, it charges tens of thousands. Ellul decided that the team would gather all the data themselves.

Since 2004, all ships of 300 gross tonnage and above on international voyages have had to carry an Automatic Identification System (AIS) tracking device. These ships are tracked automatically around the world, and anyone can have a peek at www.marinetraffic.com (just check the traffic around Malta). Every half hour, the team’s administrator Miriam Azzopardi saves the data and then integrates it into the Gozitan database. This answers the questions: where was this ship? Which ship was it? How big is it? Easy.

If only! The problem is that the researchers also need to know fuel type, engine size, pollution reduction measures, and so on. Then they would know which ship is where, how many pollutants are being emitted, and how many are reaching Malta and Gozo. To get over this hurdle, they contacted Transport Malta (more than once) to ask for the information they needed. ‘About 50% of the ships passing there [by us or Suez are] Malta registered,’ explained Ellul. With this information in hand they could put two and two together. They could create a model for ship emissions close to the Islands and use the model to get the bigger picture.
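Putting two and two together is, at heart, a database join. The Python sketch below matches each tracked position to registry details by the ship’s IMO number. The record layouts, field names, and example values are hypothetical, not the Gozo team’s actual database schema. Positions for ships missing from the registry are kept aside rather than silently dropped, since those are the gaps that need chasing up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Position:          # one tracking report, saved every half hour
    imo: int
    time: str
    lat: float
    lon: float

@dataclass(frozen=True)
class ShipDetails:       # the extra information the tracking data lacks
    imo: int
    engine_kw: float
    fuel: str

def enrich(positions, registry):
    """Pair each position with its ship's details; return (matched, unmatched)."""
    details_by_imo = {d.imo: d for d in registry}
    matched, unmatched = [], []
    for p in positions:
        details = details_by_imo.get(p.imo)
        if details is None:
            unmatched.append(p)
        else:
            matched.append((p, details))
    return matched, unmatched

# Hypothetical example records.
positions = [
    Position(9000001, "2014-06-01T10:00", 36.3, 14.1),
    Position(9000002, "2014-06-01T10:00", 36.5, 14.4),
]
registry = [ShipDetails(9000001, 85_000, "heavy fuel oil")]
matched, unmatched = enrich(positions, registry)
print(len(matched), "matched;", len(unmatched), "to chase up")
```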

Enter their final problem: how do you model it? Enter the Finns. Ing. Francelle Azzopardi travelled to the Finnish Meteorological Institute. The Finns had already modelled the Baltic Sea and now wanted access to the Maltese data; in return, the Maltese team wanted access to their model, called STEAM (Ship Traffic Emission Assessment Model).

STEAM is a very advanced model. It gathers the ships’ properties, such as engine power, fuel type, and ship size, and combines them with their operating conditions, including speed, friction, wave action, and so on. STEAM then spits out where the team should be seeing the highest pollution indicators. Malta was surrounded.
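STEAM itself is far more detailed, but the basic idea of a bottom-up ship emission estimate can be sketched in Python. The sketch assumes the propeller law, a common approximation in which the main engine’s load grows with the cube of the ship’s speed relative to its design speed, and multiplies the energy the engine delivers by an emission factor. The 85 MW engine is Ellul’s figure for a typical 50,000 tonner; the speeds and the 10 g/kWh SO₂ emission factor are placeholder values for illustration, not STEAM inputs.

```python
def engine_load(speed_kn, design_speed_kn):
    """Propeller law: the fraction of installed power in use grows with speed cubed."""
    return min((speed_kn / design_speed_kn) ** 3, 1.0)

def emissions_kg(installed_kw, speed_kn, design_speed_kn, hours, factor_g_per_kwh):
    """Pollutant mass (kg) emitted by the main engine over a stretch of voyage."""
    energy_kwh = installed_kw * engine_load(speed_kn, design_speed_kn) * hours
    return energy_kwh * factor_g_per_kwh / 1_000

# An 85 MW engine cruising at 15 knots against a design speed of 20 knots for one hour.
print(f"{emissions_kg(85_000, 15, 20, 1, 10):.0f} kg of SO2")
```

Because of the cube, a ship sailing at three quarters of its design speed uses well under half its installed power, which is one reason speed matters so much in such models.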

Independently of the model, the team have seen a clear link between ships and pollution. At the Giordan Lighthouse they can measure a whole host of pollutants: sulfur dioxide, various nitrogen oxides, particulate matter, black and brown carbon, ozone, radioactivity, heavy metals, Persistent Organic Pollutants (POPs), and more. When the wind blows from the northwest, they regularly see peaks of sulfur dioxide, nitrogen oxides, carbon dioxide, carbon monoxide, and hydrocarbons, all indicative of fossil fuel burning, either by ships or by Sicilian industry. They also picked up relatively high levels of heavy metals, especially vanadium, which is more common in the heavy fuel oil used by ships.
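Linking peaks to wind direction amounts to sorting each reading by where the wind was blowing from. A minimal Python sketch, assuming eight 45° compass sectors and made-up sulfur dioxide readings, looks like this; it is an illustration of the idea, not the team’s actual analysis.

```python
from collections import defaultdict
from statistics import mean

SECTORS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]

def sector(direction_deg):
    """Compass sector for a wind direction (degrees the wind blows FROM, 0 = north).
    Each sector spans 45°, centred on its compass point."""
    return SECTORS[int(((direction_deg % 360) + 22.5) // 45) % 8]

def mean_by_sector(readings):
    """readings: (wind_direction_deg, concentration) pairs -> mean per sector."""
    groups = defaultdict(list)
    for direction, value in readings:
        groups[sector(direction)].append(value)
    return {s: mean(values) for s, values in groups.items()}

# Made-up SO2 readings (µg/m³): higher when the wind comes off the shipping lane.
readings = [(315, 12.0), (320, 10.0), (180, 3.0), (200, 5.0)]
print(mean_by_sector(readings))  # {'NW': 11.0, 'S': 4.0}
```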

Alexander Smyth is the team’s research officer, who spends three months in Paris every year analysing filters that capture pollutants from the atmosphere. Two different filter types are placed at the Giordan Lighthouse: one for particles smaller than 2.5 micrometres and another for particles of around 2 to 10 micrometres. ‘With the 2.5 filter we can see anthropogenic emissions or ship emissions, because they tend to be the smaller particles. The filters are exposed for three to four days, and then they need to be stored in the fridge. Afterwards, I take them to Paris and conduct an array of analyses,’ explains Smyth. The most worrying pollutant he saw was vanadium.

Vanadium is a toxic metal. When inhaled, ‘it can penetrate to the alveoli of the lungs and cause cancer, a worst case scenario,’ outlined Smyth. It can also cause respiratory and developmental problems; none of this is good news. The only good news is that ‘they are in very small amounts’. Dose matters for toxicity: the team is measuring nanograms per cubic metre, and levels a couple of orders of magnitude higher would be needed to cause serious problems. No huge alarm bells need to be raised, although vanadium does accumulate in bones, and these effects still need further study.

Vanadium seems to be coming from both Malta and shipping traffic. ‘The highest peaks of vanadium are from the south [of Malta, but I detected it most often coming] from the northwest, [from ships],’ said Smyth. ‘There is a larger influence from ships compared to local pollution at the Giordan lighthouse.’
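The distinction between ‘highest peaks’ and ‘largest number of times’ is the difference between a maximum and a count, grouped by the direction the wind comes from. Below is a minimal Python sketch of this kind of sector analysis. It assumes readings arrive as hypothetical (wind direction, concentration) pairs with non-negative concentrations, and it uses an arbitrary threshold. The function names and the eight-sector split are illustrative choices, not the team’s actual processing.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Eight compass sectors, each 45 degrees wide, centred on N, NE, E, ...
SECTORS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def sector(direction_deg: float) -> str:
    """Map a wind direction (degrees the wind blows FROM) to a compass sector."""
    index = int(((direction_deg % 360) + 22.5) // 45) % 8
    return SECTORS[index]


def summarise_by_sector(
    readings: Iterable[Tuple[float, float]], threshold: float
) -> Dict[str, Tuple[float, int]]:
    """For each sector, return (highest concentration, readings above threshold).

    Assumes concentrations are non-negative, since peaks start at zero.
    """
    peaks: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for direction, concentration in readings:
        s = sector(direction)
        peaks[s] = max(peaks[s], concentration)
        if concentration > threshold:
            counts[s] += 1
    return {s: (peaks[s], counts[s]) for s in peaks}


if __name__ == "__main__":
    # Made-up readings for illustration only: (wind direction, concentration).
    demo = [(315, 3.0), (320, 2.5), (310, 2.8), (180, 9.0)]
    for name, (peak, exceedances) in summarise_by_sector(demo, threshold=2.0).items():
        print(f"{name}: peak {peak}, {exceedances} readings above threshold")
```

In the made-up demo, the south has the single highest value while the northwest exceeds the threshold most often. This is the same pattern Smyth describes.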

Vanadium is not the only pollutant that could be affecting the health of Maltese citizens. Smyth also found many different POPs. At low concentrations these compounds can weaken immunity, leading to more disease; at higher concentrations they can cause cancer. The local researchers still need to work out their effect on Malta’s health. Francelle Azzopardi also saw peaks of sulfur dioxide and nitrogen oxides. This is no surprise, as shipping is thought to cause up to a third of the world’s nitrogen oxide pollution and a tenth of its sulfur dioxide pollution.

Inhaling high levels of sulfur dioxide leads to many problems. It is associated with respiratory disease, preterm births and, at very high levels, death. It can also harm plants and other animals. Nitrogen oxides also cause respiratory disease, as well as headaches and loss of appetite, and they can worsen heart disease, sometimes fatally. These are pollutants that we want to keep as low as possible.

Ship emissions expert James Corbett (University of Delaware) calculates that around 60,000 people worldwide die every year because of ship emissions. Most of these deaths occur along the coastlines of Europe, East Asia, and South Asia. Shipping causes around 4% of the emissions that drive climate change, and this is set to double by 2050. In major ports, shipping can be the main cause of air pollution on land.

Another unexpected pollutant was ozone, normally formed when oxygen reacts with sunlight. Yet the Giordan lighthouse was not the first to measure this gas. It all started with the Jesuits, a scholarly Catholic religious order.

A lot of time is needed to see changes in our atmosphere; researchers need to gather data over many years. To speed up the process, Ellul hunted around Malta and Gozo for historical meteorological records of the Islands’ past atmosphere.

He was tipped off that there were still some records at a seminary in Gozo. ‘We expected to find just meteorological data and instead we also found ozone data as well. It was a complete surprise and a stroke of very good luck. We were able to find out what happened to ozone levels in the Mediterranean over the last hundred years.’

The Jesuits meticulously measured ozone levels from 1884 to 1900. Analysis of their records showed that ozone concentrations were a mere 8 to 12 parts per billion by volume (ppbv). Ellul compared these with a 10-year study he conducted from 1997 to 2006. ‘We measure around 50 ppb on average throughout the year,’ he said. That is nearly five times more, in just over a century.
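The ‘nearly five times’ figure can be checked with a quick ratio. It takes the midpoint of the historical 8 to 12 ppbv range and compares it with the modern average of about 50 ppbv, then repeats the comparison for each end of the historical range:

```latex
\frac{50\ \text{ppbv}}{10\ \text{ppbv}} = 5,
\qquad
\frac{50}{12} \approx 4.2
\quad\text{to}\quad
\frac{50}{8} \approx 6.3
```

Depending on which end of the historical range is used, the increase lies between roughly four and six times.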

The situation is quite bad for Malta. In the past, ozone levels were lowest in summer and highest in winter and spring. Now the pattern has reversed: spring and summer have the highest levels, because sunlight drives reactions between hydrocarbons and nitrogen oxides that produce ozone. These pollutants come from cars, industry, and ships.

Over the eastern Mediterranean, ozone levels have gradually decreased. Over Malta, in the central Mediterranean, they have stayed the same. Ellul thinks this could be because an anticyclone over the central Mediterranean brings pollutants from Europe over Malta and Gozo. Ozone levels in Malta and Gozo are the highest in Europe, and this could be mostly Europe’s fault. Our excessive traffic doesn’t help.

Ozone can be quite a mean pollutant. While stratospheric ozone blocks harmful UV rays, low-level ozone can directly damage our health or react with other pollutants to create toxic smog. It is known to start harming humans at levels above 50 ppbv. It inflames airways, causing difficulty breathing, coughing, and great discomfort. Some research has even linked it to heart attacks. It is a pollutant not to be taken lightly.

Over those 10 years, Ellul and his team saw 20 episodes in summer when ozone levels exceeded 90 ppbv. Some occurred at night. Since ozone production needs sunlight, these night-time episodes are unlikely to be of local origin and are more likely due to transport across the central Mediterranean and to shipping. Still, Ellul does not rule out air recirculating from Malta. The atmosphere is a complicated creature.

Plants also suffer from ozone: above 40 ppbv, crop yields decrease. Gozo is definitely being affected; its fields could be producing more.

Ellul and his team have found a potentially big contributor to the Islands’ pollution. This would be over and above our obvious traffic problem. Yet Ellul admits that ‘there is no particular trend, it’s too short a time span. What it tells us is that what we think is a clean atmosphere is not really a clean atmosphere at all. The levels are significant.’ Azzopardi honestly says ‘I can [only] give you an idea of what is happening’.

The team needs to study the problem for longer and gather enough data for robust statistics. They clearly see a link between ships passing by Malta and peaks in pollution levels, but the Islands need to know whether shipping contributes more pollution than industry, traffic, or Saharan dust. How much do ships contribute to Malta’s health problems?

Once the team knows the extent of the pollution, it can check whether levels exceed European standards. Ozone already does, probably because of pollution from the European continent. If the team can show that a whole host of other pollutants also rise above European standards because of ship traffic, then ‘our politicians,’ says Ellul, can go to Brussels to push for new legislation. Such laws could regulate Mediterranean shipping traffic and clean up our air. At the very least, they would solve one significant problem that Malta cannot solve on its own.

The main problem is economic. A ship can be made greener by switching to fuel with a lower sulfur content, but low-sulfur fuels cost double the price of the bunker fuel ships currently burn. New legislation would need enforcement, which is costly. Ships could also be upgraded, again at a price. Passing these laws is not going to be easy.

Ships have been a pollution black hole for a while. The fuels they burn contain 3,000 times more sulfur than cars are allowed to burn. Quite unfair. Going back to Corbett’s figures, which put the European death toll at 27,000, the current rise in shipping pollution could end up killing hundreds of thousands, if not millions, before new legislation is enforced. Now that would be truly unfair.

Computational chemistry is a powerful interdisciplinary field in which traditional chemistry experiments are replaced by computer simulations. These simulations use the underlying physics to calculate chemical or material properties, and the field is evolving as fast as computing power grows. The shift towards computational experiments is not surprising, since they may reduce research costs by up to 90%: a welcome statistic during this financial crisis.

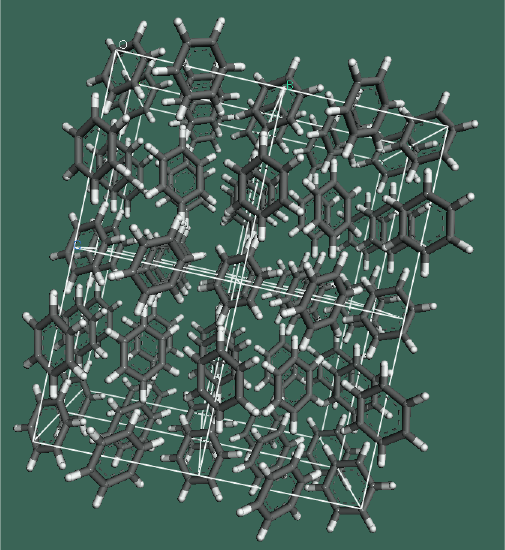

Keith M. Azzopardi (supervised by Dr Daphne Attard) used two distinct computational techniques to uncover the structure of solid benzene, a carcinogenic chemical. He also looked into its mechanical properties, especially whether it is auxetic; auxetic materials become thicker when stretched. By studying benzene, Azzopardi is testing whether the approach works. If it does, it could be applied to the many natural products that incorporate the benzene ring but, unlike benzene, are not toxic.
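Auxetic behaviour is usually expressed through Poisson’s ratio, ν = -(transverse strain)/(axial strain). Ordinary materials have a positive value because they get thinner when stretched, while auxetic ones have a negative value. Below is a minimal Python sketch of that definition. It assumes simple, already-measured strains. For an anisotropic crystal like solid benzene, the ratio varies with direction and would be computed from the crystal’s elastic constants, which this sketch does not attempt.

```python
def poissons_ratio(axial_strain: float, transverse_strain: float) -> float:
    """Poisson's ratio: minus the transverse strain divided by the axial strain."""
    if axial_strain == 0:
        raise ValueError("axial strain must be non-zero")
    return -transverse_strain / axial_strain


def is_auxetic(axial_strain: float, transverse_strain: float) -> bool:
    """A material is auxetic if its Poisson's ratio is negative."""
    return poissons_ratio(axial_strain, transverse_strain) < 0


if __name__ == "__main__":
    # Illustrative strains: a sample stretched by 1% along the pulling direction.
    print(is_auxetic(0.01, -0.003))  # gets 0.3% thinner: ordinary, prints False
    print(is_auxetic(0.01, 0.002))   # gets 0.2% thicker: auxetic, prints True
```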

The crystalline structures of solid benzene were reproduced using computer modelling. The first technique was the ab initio method, which works from the fundamental physical equations describing the atoms involved. This approach is demanding for both the computer and the researcher. It showed that four of the seven phases of benzene could be auxetic.

The second, less intensive technique is known as molecular mechanics. To simplify matters, it treats atoms as balls and the bonds between them as springs. This makes the process much faster, but it can be unreliable on its own because of some major assumptions. Indeed, molecular mechanics alone was insufficient for modelling benzene.
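In the ball-and-spring picture, the energy of a structure is a sum of simple terms that a computer can evaluate very quickly. Below is a minimal Python sketch that assumes only two kinds of term: a harmonic spring for each bond, and a Lennard-Jones 12-6 potential for every non-bonded pair of atoms. Real force fields add angle, torsion and electrostatic terms, exclude or scale nearby pairs, and use fitted parameters. Every parameter here is hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float, float]


def bond_energy(r: float, r0: float, k: float) -> float:
    """Harmonic spring: zero at the rest length r0, rising quadratically."""
    return 0.5 * k * (r - r0) ** 2


def lennard_jones(r: float, epsilon: float, sigma: float) -> float:
    """12-6 potential: repulsive at short range, weakly attractive further out."""
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def total_energy(
    positions: Sequence[Point],
    bonds: Iterable[Tuple[int, int]],
    r0: float,
    k: float,
    epsilon: float,
    sigma: float,
) -> float:
    """Sum spring energies over bonds and Lennard-Jones energies over all other pairs."""
    bonded = set()
    energy = 0.0
    for i, j in bonds:
        energy += bond_energy(math.dist(positions[i], positions[j]), r0, k)
        bonded.add(frozenset((i, j)))
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if frozenset((i, j)) not in bonded:
                r = math.dist(positions[i], positions[j])
                energy += lennard_jones(r, epsilon, sigma)
    return energy


if __name__ == "__main__":
    # Three atoms in a line; only pairs 0-1 and 1-2 are bonded (hypothetical units).
    atoms = [(0.0, 0.0, 0.0), (1.4, 0.0, 0.0), (2.8, 0.0, 0.0)]
    print(total_energy(atoms, [(0, 1), (1, 2)], r0=1.4, k=1.0, epsilon=0.1, sigma=3.0))
```

The speed comes from this simplicity: each term is a cheap formula of a single distance, whereas an ab initio calculation must solve for the electrons themselves. The same simplicity is why the result depends heavily on how well the parameters were chosen.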

Taken together, the results show that molecular mechanics could be a useful, quick starting point, which then needs refining with the ab initio method.

This research was performed as part of a Master of Science in Metamaterials at the Faculty of Science. It is partially funded by the Strategic Educational Pathways Scholarship (Malta). This Scholarship is part-financed by the European Union – European Social Fund (ESF) under Operational Programme II – Cohesion Policy 2007-2013, “Empowering People for More Jobs and a Better Quality of Life”. It was carried out using computational facilities (ALBERT, the University’s supercomputer) procured through the European Regional Development Fund, Project ERDF-080 ‘A Supercomputing Laboratory for the University of Malta’.

Laptops and mobiles are smaller, thinner, and more powerful than ever. The drawback is heat: more computing power comes hand in hand with higher temperatures. Macs have been known to melt down, catch fire and fry eggs; PCs can be even more entertaining. David Grech (supervised by Prof. Emmanuel Sinagra and Dr Ing. Stephen Abela) has now produced diamond–metal matrix composites that can remove waste heat efficiently.

Diamonds are not only beautiful but also have some remarkable properties. They are very hard, can withstand extreme conditions, and even conduct heat better than any metal. This ability makes diamonds ideal as heat sinks and spreaders.

The gems are rigid, which makes them difficult to mould into the complex shapes demanded by the microelectronics industry. By combining diamonds with other materials, new architectures can be constructed. Grech pressed synthetic diamond and silver powders together at the metal’s melting point. The resulting composite expanded very little when heated and could dissipate heat effectively. It was also cheaper and simpler to produce than materials made by current methods: a step closer to use on microchips.
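Two textbook composite models give a rough idea of why adding diamond lowers thermal expansion. The first is the simple rule of mixtures. The second is Turner’s model, which weights each phase by its bulk modulus, so that the stiff diamond restrains the expanding metal. Below is a minimal Python sketch that assumes approximate room-temperature values for diamond and silver and ignores porosity and interface effects. The numbers are illustrative, not Grech’s measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    cte_per_k: float         # coefficient of thermal expansion, 1/K
    bulk_modulus_gpa: float  # resistance to uniform compression, GPa


# Approximate room-temperature values, assumed for illustration.
DIAMOND = Phase(cte_per_k=1.0e-6, bulk_modulus_gpa=443.0)
SILVER = Phase(cte_per_k=19.0e-6, bulk_modulus_gpa=100.0)


def _check(v_diamond: float) -> None:
    if not 0.0 <= v_diamond <= 1.0:
        raise ValueError("volume fraction must be between 0 and 1")


def rule_of_mixtures(v_diamond: float, d: Phase = DIAMOND, m: Phase = SILVER) -> float:
    """Volume-weighted average of the two expansion coefficients."""
    _check(v_diamond)
    return v_diamond * d.cte_per_k + (1.0 - v_diamond) * m.cte_per_k


def turner(v_diamond: float, d: Phase = DIAMOND, m: Phase = SILVER) -> float:
    """Turner's model: expansion coefficients weighted by bulk modulus and volume."""
    _check(v_diamond)
    v_metal = 1.0 - v_diamond
    numerator = (d.cte_per_k * d.bulk_modulus_gpa * v_diamond
                 + m.cte_per_k * m.bulk_modulus_gpa * v_metal)
    denominator = d.bulk_modulus_gpa * v_diamond + m.bulk_modulus_gpa * v_metal
    return numerator / denominator


if __name__ == "__main__":
    v = 0.5  # half diamond, half silver by volume
    print(f"rule of mixtures: {rule_of_mixtures(v) * 1e6:.1f} ppm/K")
    print(f"Turner's model:   {turner(v) * 1e6:.1f} ppm/K")
```

For a 50:50 mix by volume, these assumed values give about 10 ppm/K from the rule of mixtures and about 4.3 ppm/K from Turner’s model, compared with roughly 19 ppm/K for pure silver. That brings the composite much closer to silicon chips (around 2.6 ppm/K), so a heat spreader made from it would strain the chip less as both warm up.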

Grech’s current research focuses on creating novel interfaces between the diamond powders and the metal matrix, which could improve the performance of heat sinks. New production techniques could help make these materials. One idea is to deposit a very thin layer of nickel (200 nanometres thick) on the diamond powders through a chemical reaction. The diamonds would then bond chemically with the nickel layer, while the metal matrix would form metallic bonds with it. The material would conduct heat quickly and expand very little on heating. A heat sink made from it would mean cooler microprocessors and powerful electronics that do not spontaneously catch fire: good news for tech lovers.

This research was performed as part of a Bachelor of Science (Hons) at the Faculty of Science. It is funded by the Malta Council for Science and Technology through the National Research and Innovation Programme (R&I 2010-25 Project DIACOM) and IMA Engineering Services Ltd.