Brain-computer interface (BCI) devices can read a person’s brain activity and turn it into commands to move objects. They can give freedom to people with movement impairments. Rosanne Zerafa (supervised by Tracey Camilleri) developed a system that detects a person’s brain patterns while they are thinking of moving a particular part of their body and translates those patterns into commands to move a cursor. The research has the potential to cut the considerable lag between a person thinking of moving an object and the object actually moving.

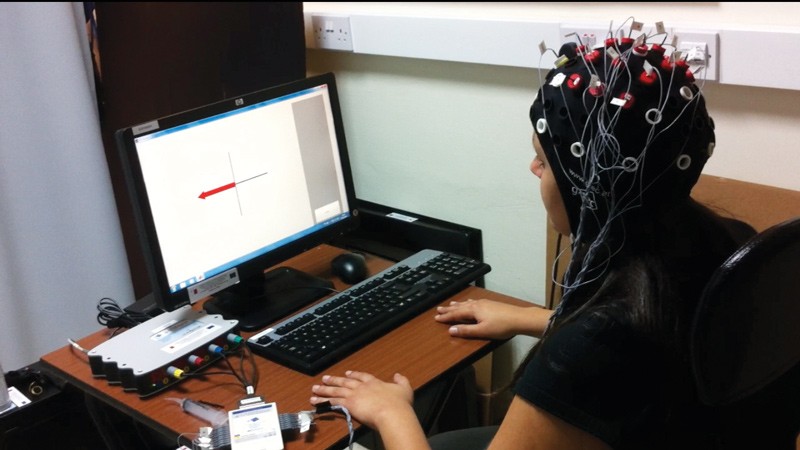

Brain activity can be detected using electroencephalography (EEG), typically recorded through a cap with electrodes that touch a person’s scalp. The electrical activity captured by the electrodes is then interpreted by software, which issues commands to move a robotic arm, wheelchair, or other assistive device.

Zerafa tested the system on four individuals who were thinking about moving their left or right hand. Different brain patterns from these two tasks could be identified and translated into left or right movement of a cursor on a computer screen.
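
To make the idea concrete, here is a minimal sketch of how left versus right hand imagery might be turned into cursor movement. It is not Zerafa's method, which the article does not describe. It assumes a common simplification: imagining a hand movement tends to lower 8 to 12 Hz ("mu") power over the motor cortex on the opposite side of the head. The electrode names C3 (left hemisphere) and C4 (right hemisphere), the 128 Hz sampling rate, the 10-pixel step, and every function name are hypothetical choices for illustration, and the signals are synthetic sine waves rather than real EEG.

```python
import math

FS = 128  # assumed sampling rate in Hz (hypothetical, not from the study)


def band_power(signal, fs, low_hz, high_hz):
    """Mean power of the DFT bins whose frequency lies in [low_hz, high_hz]."""
    n = len(signal)
    powers = []
    for k in range(1, n // 2):
        freq = k * fs / n
        if low_hz <= freq <= high_hz:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            powers.append((re * re + im * im) / (n * n))
    return sum(powers) / len(powers)


def classify_imagery(c3, c4, fs=FS):
    """Guess the imagined hand from mu-band power at two electrodes.

    Assumption: lower mu power at C3 (left hemisphere) suggests right-hand
    imagery; otherwise the guess is left-hand imagery.
    """
    mu_c3 = band_power(c3, fs, 8.0, 12.0)
    mu_c4 = band_power(c4, fs, 8.0, 12.0)
    return "right" if mu_c3 < mu_c4 else "left"


def move_cursor(x, label, step=10):
    """Shift the cursor's horizontal position by one step in the chosen direction."""
    return x + step if label == "right" else x - step


def synthetic_channel(mu_amplitude, fs=FS, seconds=1.0):
    """Stand-in for one EEG channel: a pure 10 Hz sine of the given amplitude."""
    n = int(fs * seconds)
    return [mu_amplitude * math.sin(2 * math.pi * 10 * i / fs) for i in range(n)]


# Simulated right-hand imagery: the mu rhythm is weaker at C3 than at C4.
c3 = synthetic_channel(0.5)
c4 = synthetic_channel(2.0)
label = classify_imagery(c3, c4)
cursor_x = move_cursor(100, label)
print(label, cursor_x)
```

A real system would need noise filtering, many more electrodes, and a classifier trained on each user's recordings. The sketch only shows the overall shape of the pipeline: signal in, feature extracted, decision made, cursor moved.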

With further development and testing, the software could be adapted to real-world needs such as assisting people with movement difficulties, or even gaming.

This research was performed as part of a Bachelor of Engineering (Honours) at the Faculty of Engineering.