Decades-long research into the field of brain to computer interface (BCI) devices seems close to bearing fruit. These devices could provide communication tools to Malta’s growing number of older people and the disabled, while local gaming companies could exploit a new niche entertainment market. Words by Dr Edward Duca.

“Yes, this is the ability to control objects with your thoughts,” confirmed Mr Owen Falzon. Such phrases were previously the domain of witch doctors and madmen, but this has changed since the 1990s, when the concept started to come under intense scientific study. It is also attracting economic interest from companies ranging from healthcare providers to game developers. The technology offers a new way to navigate the web and play games, and could give new abilities to the elderly and disabled.

Zammit Lupi courtesy of The Sunday Times

Mr Owen Falzon forms part of a team at the University of Malta led by Professor Ing. Kenneth Camilleri, which studies brain to computer interface (BCI) devices. In these devices, computer software translates brain signals into commands. Those commands perform specific actions depending on the setup, for example, moving a wheelchair. To understand a command, the computer needs to distinguish between different brain patterns, such as those produced by imagining moving your arm left or right, or by thinking of a square or a circle. When the computer detects that you are imagining moving your arm to the left, it performs the matching command, such as turning a wheelchair in the same direction.

To identify different brain patterns, the computer must first detect brain activity. Detection is nothing new. Hans Berger first managed it in 1924 by sticking silver wires under the scalps of his patients and hooking them up to a voltmeter, which let him detect the brain’s electrical activity. Our brains are filled with neurons that carry messages through electrical pulses from one part of the brain to another.

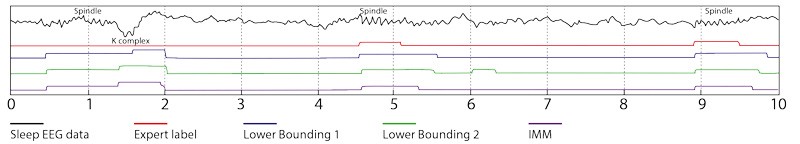

What Owen uses for his research to measure brain activity is a bit more sophisticated and much more comfortable. A cap is fitted to the user’s head, and electrodes screwed into the cap touch the wearer’s skin. It is not the most attractive device, but these electrodes detect the electrical activity in the wearer’s brain, which is displayed on a screen as an electroencephalogram (EEG; sample data pictured). It lets researchers study brain patterns.

The brain speaks to itself

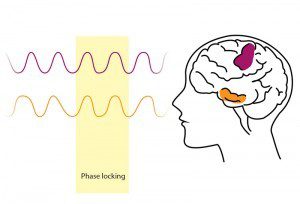

Research into brain to computer interface devices tends to study brain locations individually. Mr Falzon’s latest work aims to make BCI devices more accurate by “focusing on interactions between different parts of the brain.” The brain is split into different areas specialised for specific functions. The occipital lobe, for example, translates the 2D image on our retina into the sophisticated 3D image we live and work in, while the temporal lobe processes visual information and lets our brains make sense of it all. Both areas of the brain are needed to look and remember.

For human beings to function, multiple areas of the brain need to interact together to complete a single task. Mr Falzon’s research “developed an algorithm [a sequence of instructions that carry out a task, similar to what Google uses to search the web] that can automatically discriminate between different mental tasks.”

The algorithm takes advantage of a phenomenon called phase locking, which occurs when two parts of the brain are synchronized with each other, or interacting. Phase locking involves two sets of neurons in different parts of the brain firing at the same rate as each other and synchronized together like instruments being played in an orchestra to achieve a pleasant harmony. The electrodes can read the electrical signals, while the computer can recognise the phenomenon.
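One common way to put a number on phase locking is the phase-locking value (PLV): measure the phase difference between two recording sites again and again, and check how consistent it stays. The sketch below is only an illustration under stated assumptions, not Mr Falzon’s algorithm. The signals are synthetic, and the 10 Hz rhythm, the 200 Hz sampling rate, and the names `windowed_phases` and `phase_locking_value` are all made up for the example.

```python
import cmath
import math


def windowed_phases(signal, freq_hz, sample_rate_hz):
    """Phase of the freq_hz rhythm in consecutive one-cycle windows."""
    window = round(sample_rate_hz / freq_hz)  # samples in one cycle
    phases = []
    for start in range(0, len(signal) - window + 1, window):
        # Single-frequency Fourier sum over this window.
        z = sum(
            signal[n] * cmath.exp(-2j * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(start, start + window)
        )
        phases.append(cmath.phase(z))
    return phases


def phase_locking_value(phases_a, phases_b):
    """1.0 when the phase difference never changes, near 0 when it wanders."""
    unit_vectors = [cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b)]
    return abs(sum(unit_vectors)) / len(unit_vectors)


# Synthetic two-second "recordings" (assumed values, not real EEG).
SAMPLE_RATE_HZ = 200.0
times = [n / SAMPLE_RATE_HZ for n in range(400)]
site_a = [math.cos(2 * math.pi * 10.0 * t) for t in times]
site_b = [math.cos(2 * math.pi * 10.0 * t + 0.8) for t in times]  # same rate, fixed lag
site_c = [math.cos(2 * math.pi * 11.0 * t) for t in times]        # different rate

phases_a = windowed_phases(site_a, 10.0, SAMPLE_RATE_HZ)
locked_plv = phase_locking_value(
    phases_a, windowed_phases(site_b, 10.0, SAMPLE_RATE_HZ)
)
unlocked_plv = phase_locking_value(
    phases_a, windowed_phases(site_c, 10.0, SAMPLE_RATE_HZ)
)
print(f"locked pair: {locked_plv:.2f}, unlocked pair: {unlocked_plv:.2f}")
```

The first pair fires at the same rate with a constant lag, like two musicians keeping time, so its value comes out close to 1. The second pair drifts apart and scores close to 0.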

By finding out which parts of the brain are phase locked, Mr Falzon’s algorithm can “identify the best interactions of the brain.” By looking at more than one brain area at the same time, the computer programme should be better at figuring out whether a person is thinking about moving left or right, and can be used to control the direction of a motorised wheelchair.

Unfortunately, this new approach was not very useful for interpreting movements. It did not make the system more accurate, although “it was still a good exercise,” said Mr Falzon, since it gave insight into which parts of the brain were communicating with each other. For left and right hand movements, measuring the simple power of the brain signals is enough. The new approach can identify minute differences between brain signals, which could make it more useful for deciphering higher level thoughts, like speech.

Since at least 2009, reports have leaked from the Pentagon about research by DARPA (the US military’s outlandish research arm, featured in the film “The Men Who Stare at Goats”) into BCI devices that could let soldiers speak to each other only by thinking about it. The brain generates specific patterns before it vocalises speech. If researchers manage to turn these patterns into words efficiently, telepathy could be within reach with some simple headgear. Mr Falzon’s algorithm might be applicable to interpreting these signals.

Unlocking new commands

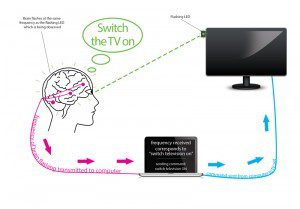

The human brain has a strange property. Parts of the brain respond to a flashing light by oscillating at the same frequency. Like a pendulum swinging and hypnotising a person, a set of LED lights flashing 20 times per second (20 Hz) forces the brain to oscillate in sync. This phenomenon is called SSVEP (Steady State Visually Evoked Potential), and it produces a brain pattern that is easy for researchers to pick up, identify, and interpret. This approach could allow them to expand the vocabulary of commands that a brain could give a computer (pictured).

One of the major problems of BCI devices is the reliability of brain pattern interpretation by a computer. Currently, most applications are restricted to one or two commands. In gaming, only one or two degrees of freedom exist, so a game of Pac-Man is still too complicated. With assistive devices, a wheelchair can simply move left or right, and fine-tuned movements are problematic. More ambitious projects to move cursors around screens with thoughts, ear, and eyebrow movements take hours to train on, which makes current eye-tracking technology superior. SSVEP technology could reverse this trend.

Prof. Camilleri’s team can use this phenomenon to figure out where a person is looking, a powerful ability. So how could it work? Imagine a room with flashing lights, each linked to a different object: a light flashing at 10 Hz is connected to the blinds, one at 15 Hz to the curtains, one at 20 Hz to a door leading to the kitchen, one at 25 Hz to a door leading into the bedroom, and one at 30 Hz switches on the TV. With simple headgear to read a person’s brain pattern, a computer can easily work out that a person is looking at the curtains and can close them, darkening the room. This can be followed by a quick glance at the TV, switching it on to watch a favourite series. For someone with mobility problems or who uses a wheelchair, these advances could revolutionise their quality of life.
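The room example can be sketched in a few lines of code: measure how strongly each flicker frequency shows up in the recording, then trigger the object tied to the strongest one. This is a minimal illustration, not the team’s software. The frequency table comes from the example above, while the recording is synthetic and the sampling rate, noise level, and function names are assumptions.

```python
import cmath
import math
import random

# Flicker frequency (Hz) -> object, copied from the room example in the text.
COMMANDS = {
    10.0: "blinds",
    15.0: "curtains",
    20.0: "kitchen door",
    25.0: "bedroom door",
    30.0: "TV",
}


def power_at(signal, freq_hz, sample_rate_hz):
    """Signal power at one frequency, from a single-bin Fourier sum."""
    total = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / sample_rate_hz)
        for n, x in enumerate(signal)
    )
    return abs(total) ** 2 / len(signal)


def detect_command(signal, sample_rate_hz):
    """Return the object whose flicker frequency is strongest in the signal."""
    strongest = max(COMMANDS, key=lambda f: power_at(signal, f, sample_rate_hz))
    return COMMANDS[strongest]


# Synthetic two-second recording (made-up numbers): a 15 Hz response buried
# under a stronger, unrelated 8 Hz rhythm and random noise.
SAMPLE_RATE_HZ = 250.0
rng = random.Random(0)
eeg = [
    math.sin(2 * math.pi * 15.0 * n / SAMPLE_RATE_HZ)
    + 2.0 * math.sin(2 * math.pi * 8.0 * n / SAMPLE_RATE_HZ)
    + rng.gauss(0.0, 1.0)
    for n in range(500)
]
print(detect_command(eeg, SAMPLE_RATE_HZ))
```

Only the five listed frequencies are checked, so the stronger 8 Hz rhythm is simply ignored and the 15 Hz response selects the curtains. A real system would also need a rule for when the user is looking at none of the lights.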

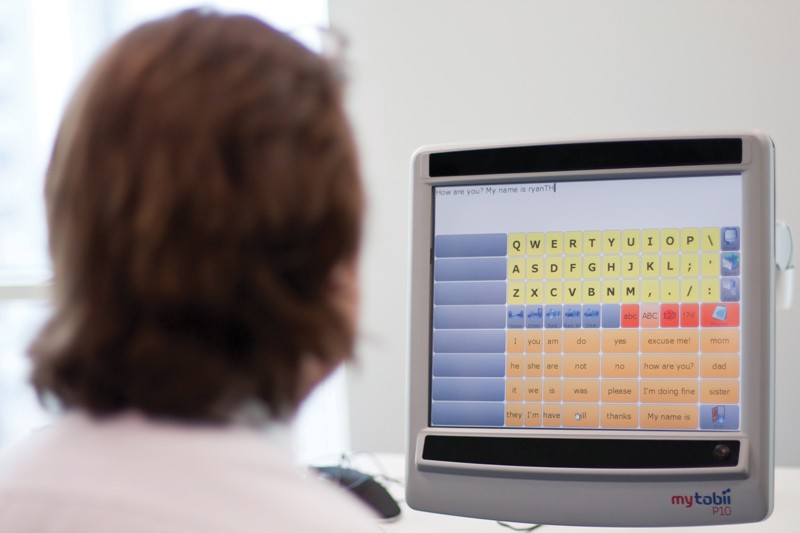

As the example above highlights, SSVEP can be connected to many more commands than other methods. It allows a huge degree of freedom. One of the best examples is its use in keyboards, which are constructed with flashing lights to allow people to type. Mr Falzon emphasised, “it is one of the most reliable methods that exist.” The opportunities this approach could unlock are mind-boggling.

Assistive eye tracking technology at Mada (Qatar Assistive Technology Center) in Doha. Infrared light tracks eye movement allowing users to control a cursor on a computer screen. Using this technology, individuals with impaired movement can use a computer. Stefania Cristina, a student of Prof. Camilleri, is developing a system with a similar function. Her approach uses computer software to track eye movement with a simple and inexpensive webcam. Once fully developed, the technology would be cheaper and available on any computer. Photo by Edward Duca

As might be expected, the spanner in the works is that flashing lights can cause discomfort and trigger serious conditions such as epileptic fits. The solution is a more limited frequency that does not cause these problems and can be adjusted to the user’s comfort zone. Limiting the frequency reduces the number of commands, so to get round this problem researchers can use flashing lights that have different time delays. For example, a time delay of 0.025 seconds could be introduced between two sets of lights flashing at 20 times per second. A computer algorithm would then distinguish between the time delays — a phenomenon called phase differentiation. Prof. Camilleri and his team have developed one of the best algorithms currently available. With the right push, the above scenario could become a reality and help those who need it most.
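The numbers in the time-delay example are worth working through. At 20 flashes per second, one full cycle lasts 0.05 seconds, so a delay of 0.025 seconds is exactly half a cycle: the second light is on while the first is off. In phase terms the delayed light lags by 2π × 20 × 0.025 = π radians. The sketch below uses that gap to tell the two lights apart. It is not the team’s algorithm, and it makes a large simplifying assumption, stated again in the code: the brain’s own response delay is ignored, whereas a real system would have to calibrate for it. The names and the jitter value are invented for the example.

```python
import cmath
import math

FLICKER_HZ = 20.0
# Two lights at the same frequency, told apart only by their time delay.
DELAYS_S = {"light A": 0.0, "light B": 0.025}


def delay_to_phase(delay_s, freq_hz):
    """Phase lag (radians) that a time delay produces at freq_hz."""
    return -2.0 * math.pi * freq_hz * delay_s


def estimate_phase(signal, freq_hz, sample_rate_hz):
    """Phase of the freq_hz component, from a single-bin Fourier sum."""
    z = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * n / sample_rate_hz)
        for n, x in enumerate(signal)
    )
    return cmath.phase(z)


def circular_distance(a, b):
    """Smallest angle between two phases, in the range 0 to pi."""
    return abs(cmath.phase(cmath.exp(1j * (a - b))))


def identify_light(signal, sample_rate_hz):
    """Pick the light whose expected phase is closest to the measured one."""
    measured = estimate_phase(signal, FLICKER_HZ, sample_rate_hz)
    return min(
        DELAYS_S,
        key=lambda name: circular_distance(
            measured, delay_to_phase(DELAYS_S[name], FLICKER_HZ)
        ),
    )


def simulated_response(delay_s, sample_rate_hz, jitter_rad=0.3):
    """Idealised one-second response. Assumption: the brain adds no delay
    of its own, only a small phase error (jitter_rad)."""
    return [
        math.cos(
            2 * math.pi * FLICKER_HZ * (n / sample_rate_hz - delay_s) + jitter_rad
        )
        for n in range(int(sample_rate_hz))
    ]


SAMPLE_RATE_HZ = 200.0
print(identify_light(simulated_response(0.0, SAMPLE_RATE_HZ), SAMPLE_RATE_HZ))
print(identify_light(simulated_response(0.025, SAMPLE_RATE_HZ), SAMPLE_RATE_HZ))
```

With only two lights, half a cycle is the widest possible separation, which leaves the most room for error. Adding more lights at the same frequency means smaller delays and smaller phase gaps to tell apart.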

Making them faster

Mr Falzon’s research is linked to Ms Tracey Camilleri’s, another member of Prof. Kenneth Camilleri’s team working at the Biomedical Engineering Laboratory of the Department of Systems and Control Engineering. Her latest work builds upon the research of Prof. Ing. Simon Fabri (from the same department) who developed an algorithm that detects when a machine changes its mode of operation, and learns the new mode’s characteristics. She adapted this system to interpreting brain signals. The aim was to use less computer processing power to identify brain patterns making it faster than conventional approaches — so fast that patterns can be labeled as they are read with no appreciable delay.

Professor Fabri’s work is applicable to situations where abrupt changes in system behavior occur, for example, detecting sensor failures in airplanes or tracking fast-moving objects like drone aircraft. In her latest work, Ms Camilleri adapted this algorithm to sleep patterns in the brain. When a person is asleep their brain passes through different phases of activity. The brain continuously has some background activity, but it can also give bursts of activity, such as a sleep spindle or K complex. A sleep spindle is thought to inhibit brain processing, keeping a person asleep, while a K complex seems important for sleep-based memory.

The algorithm labeled the sleep patterns by continuously applying three models to every data set, known as a Switching Multiple Model approach. Each model was ‘trained’ on either background, a sleep spindle, or a K complex. The model which best matched the data ‘won’. Her programme did this every one hundredth of a second, fast enough to capture the signals they were interested in, but not so fast that the signal was swamped with noise. After being trained, the programme could also learn new additional patterns, so-called adaptive learning. The speed and accuracy of her labeling algorithm were just as good as those of other, more computationally intensive (and complete) approaches, or of an experienced clinician. The benefits of her approach were that it could “label the data on the fly” and in real-time “reducing the time lag”, she explained. This technology could save a clinician valuable time.
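The idea of several models competing for every sample can be sketched in code. The version below is a toy under heavy assumptions, not Ms Camilleri’s implementation: each ‘model’ is a hand-set two-term predictor rather than something trained on real sleep data, the adaptive learning step is left out, and the 13 Hz spindle rhythm, the 1 Hz slow wave, the window length, and the synthetic signal are all invented for illustration. Only the one-label-per-hundredth-of-a-second rate comes from the text.

```python
import math
import random
from collections import Counter

SAMPLE_RATE_HZ = 100.0  # one label every hundredth of a second, as in the text
WINDOW = 10             # assumed: judge each model on its last 0.1 s of errors


def resonator(freq_hz):
    """Predictor weights that follow a steady rhythm at freq_hz exactly."""
    return (2.0 * math.cos(2.0 * math.pi * freq_hz / SAMPLE_RATE_HZ), -1.0)


# Hand-set stand-ins for trained models (assumed values, not from the article).
MODELS = {
    "background": (0.0, 0.0),          # expects nothing but low-level noise
    "sleep spindle": resonator(13.0),  # expects a fast rhythm
    "K complex": resonator(1.0),       # expects a large slow wave
}


def label_samples(signal):
    """Label each sample with the model whose recent predictions fit best."""
    errors = {name: [] for name in MODELS}
    labels = []
    for n in range(2, len(signal)):
        for name, (a1, a2) in MODELS.items():
            predicted = a1 * signal[n - 1] + a2 * signal[n - 2]
            errors[name].append((signal[n] - predicted) ** 2)
        # The model with the smallest recent error 'wins' this sample.
        labels.append(min(MODELS, key=lambda name: sum(errors[name][-WINDOW:])))
    return labels


def majority(labels, start, stop):
    """Most frequent label in labels[start:stop]."""
    return Counter(labels[start:stop]).most_common(1)[0][0]


# Synthetic three-second signal: quiet background, then a spindle-like burst,
# then one large slow wave, all with a little added noise.
rng = random.Random(0)
signal = []
for n in range(300):
    t = (n % 100) / SAMPLE_RATE_HZ
    noise = rng.gauss(0.0, 0.05)
    if n < 100:
        signal.append(noise)
    elif n < 200:
        signal.append(math.sin(2 * math.pi * 13.0 * t) + noise)
    else:
        signal.append(2.0 * math.sin(2 * math.pi * 1.0 * t) + noise)

labels = label_samples(signal)
for start in (20, 120, 220):
    print(start / SAMPLE_RATE_HZ, "s:", majority(labels, start, start + 70))
```

Each label needs only a handful of multiplications per model, which hints at why this style of approach can keep up with the data as it arrives. Labels right at the boundaries between segments are less trustworthy, because the error window briefly straddles two kinds of activity.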

Labeling brain sleep pattern data is nothing new. Abnormal patterns have been linked to mental diseases from schizophrenia to epilepsy. In epilepsy, it is a common procedure. Ms Camilleri’s advance was to do it in real-time using little computing power when compared to what’s out there already. Prof. Camilleri envisions that in the future such labeling could be used in other medical applications, such as the early detection of an epileptic fit. In this case, the programmed algorithm could be linked to a wireless device that sends an early warning signal to the epilepsy sufferer and loved ones. Even better, “you could also have a system that may inject the user with a drug when the onset of an epileptic fit is detected.” Obviously, this is still in development.

Before such radical advances, Tracey would “like to put this into an application”; to use more complex data like brain patterns that can move a cursor on a screen. She hopes that “if a person is totally paralysed you can at least give him, or her, a source of communication.” Her algorithms could make this technology work conveniently in real time.

Vision for Malta

Qatar is a small gulf state awash with gas reserves. It has used its wealth to become one of the most influential and progressive of Middle Eastern Arab states. It is the home of Al Jazeera, the Qatar Foundation (a multi-billion educational and research institution), and a leading assistive living centre. The centre is flush with devices that can help disabled people and paraplegics communicate and become productive.

The centre works by sourcing the latest assistive technology and offering the devices at a subsidised rate: 50% off. Malta is not sinking in fossil fuel gold, so we couldn’t afford this approach. So what form of assistive centre would work? Prof. Camilleri’s suggestion needs both government and industry support.

The Centre for Biomedical Cybernetics is a good first step. “The University has invested in and placed itself in a very strong position to grow in this [BCI] area. … As a University, our main priority remains research and transfer of knowledge to students and other people.” He can easily see how other stakeholders outside the University can work together with the Centre to create a similar assistive technology centre. It would “provide short courses and an advisory and technical assistance role to agencies and institutes in Malta.”

The next step would be for research funds to be funneled towards developing the technology. Working together with the elderly and disabled, they could make a site-specific prototype for companies to turn into products. Researchers could also reengineer the product for local needs. Some form of assistive technology centre would help the Health, Elderly and Community Care Department within Government to achieve its goal to treat more people at home and maintain their dignity.

To fulfill these roles this centre would need “to create a practical team of clinicians, engineers and care workers — [that] needs some funding infrastructure on the ground,” continued Prof. Camilleri. In his opinion, “University cannot drive this alone… there is a missing link locally between the research and the commercial aspect. That [structure] which makes [such collaborations] feasible does not exist”, a situation which needs to change.

Problems for Commercialisation


The market for BCI devices is ripe. It has been ripe for many years and startups are already present in most imaginable applications. Companies abound in health, scientific research, digital art, gaming, military applications, edutainment, and ICT, and even economics is not left out.

In 2008, the world saw the first commercial headgear, initially priced at $300. This BCI device was marketed for gamers; it could respond to a wearer’s emotions and insert emoticons (☺, ☹) for a player’s character or whilst chatting. It could even make objects in a game move, but this was limited to up or down movement. Not the most exciting gameplay!

Right now the technology is limited. Two major problems are the reliability of interpreting users’ brain commands and the rate of communication. Mr Falzon’s work will help increase reliability, while Ms Camilleri’s method could identify patterns in real time. Imagine a wheelchair user is trying to move left or right with thoughts alone. The person would want to move it quickly and reliably, especially while avoiding obstacles like an oncoming car. There are more invasive approaches that would add commands and reliability. However, few people want electrodes stuck under their skin or bored through their skull just to play a computer game. Such invasive procedures also push costs beyond the reach of those who need the technology the most: the aged, the disabled, and wheelchair users.

The best solution seems to reside in perfecting the physical devices and the computer algorithms needed to interpret the brain patterns. Once these are developed, people with limited movement could wear a cheap headset and navigate the web easily. They could open doors and curtains by looking at them, change TV channels without needing to press a remote, or move an online avatar, like in Second Life. Simply put, they could lead an easier and better life. Inevitably these technologies will enter the commercial market. Who wouldn’t love to play puzzle games simply by looking at a door, or thinking that it should open? Mr Falzon finished off by saying that “for healthy users within 5 years we’ll see reliable systems”. I cannot wait for that future.

A shorter version of this article first appeared in TechSunday, the technology supplement of The Sunday Times.

Find out more:

– The first developer of a commercial BCI device: http://www.emotiv.com/

– Good articles on the assistive benefits of BCI devices: Science daily: http://tinyurl.com/braincompint AND The economist: http://www.economist.com/node/21527030 AND BBC (Video): http://tinyurl.com/bbcbrainpower

– Slideshow on “The marketability of brain to computer interfaces”: http://tinyurl.com/prezimarket

– Latest scientific articles by Prof. Kenneth Camilleri’s team: http://tinyurl.com/sleepeeg AND http://tinyurl.com/motorimagery
